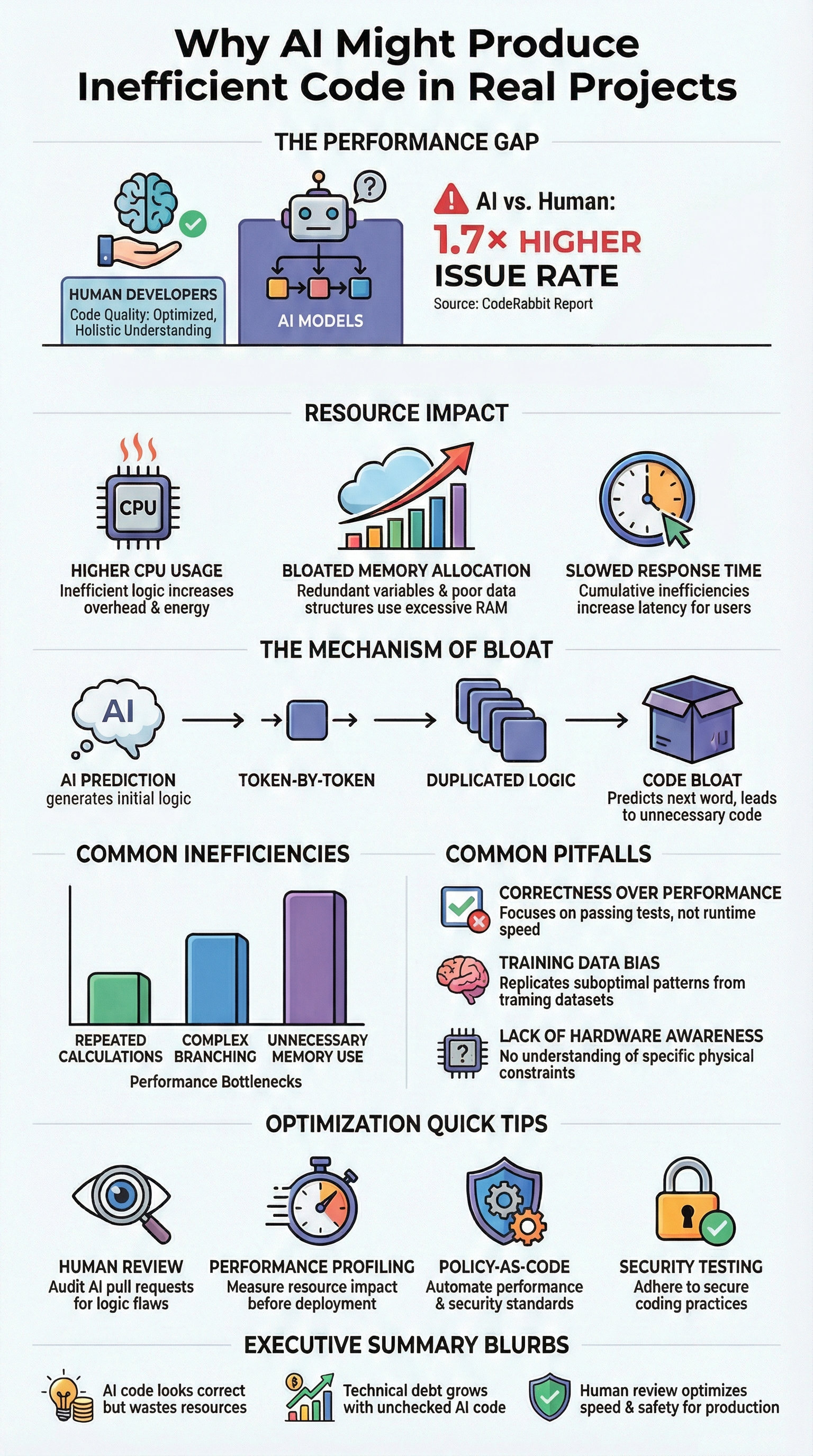

AI often produces inefficient code because it predicts patterns and aims to pass tests, not to optimize performance. It focuses on what looks correct, not on what runs lean under pressure. In projects we’ve reviewed, this shows up as higher CPU usage, unnecessary memory allocation, and services that struggle when real traffic hits.

The code works on paper, but it wastes resources in production. As more teams ship AI-generated code quickly, these inefficiencies compound. Understanding where they come from helps we decide when to trust automation and when to intervene. Keep reading to see the common pitfalls and how secure coding practices address them.

Core Reasons AI Might Produce Inefficient Code

- AI code generation cares more about looking correct than running well.

- Training data flaws and prediction errors copy bad patterns at scale.

- Applying secure coding practices early cuts performance risks and long-term technical debt.

Why does AI-Generated Code Often Prioritize Correctness Over Performance?

AI code focuses on being correct because the models are rewarded for producing output that compiles and passes basic checks. They aren’t judged on execution speed or memory footprint.

In our reviews, we see this play out. Generated functions often include extra defensive checks, wordy logic, and unnecessary layers of abstraction. The tests pass, so everyone moves on. The performance cost, like slower API responses or higher cloud bills, only shows up later.

We’ve noticed AI usually picks “safe” implementations that look like common examples online. That means using generic lists where a simple array would do, or making needless copies of data “just in case.” Industry estimates suggest a large majority of AI snippets favor correctness over any optimization.

This makes sense when thinking about how these models are trained. They succeed when the code runs, not when it runs fast. Our secure coding practices force us to review generated code for both safety and efficiency before it ever reaches production, catching these issues early.

How do Training Data Biases Lead to Inefficient Patterns?

AI learns bad habits because it’s trained on public code, and let’s be honest, most public code isn’t optimized. It’s just code that works well enough to ship.

These models learn from millions of GitHub repos, where getting a feature out the door is often more important than making it elegant or fast.

Studies of these repositories show a lot of duplicated logic and suboptimal solutions. As explained in the research,

“Optimization functions push the system toward the most statistically efficient patterns… Each iteration strengthens the same patterns, regardless of whether they are accurate” – Diabolocom.

We’ve tested this ourselves. Ask an AI to solve a problem with known performance constraints, and it will often give ourselves a familiar but slow answer, like using a nested loop, simply because that’s the pattern it saw most often.

The model can’t tell the difference between “popular” and “good.” It just mimics what’s statistically common. That’s why our process doesn’t stop at the AI’s output. Our secure coding practices require a human to review the algorithm and data structures, asking if there’s a better way, not just a common one.

What Limitations Prevent AI From Understanding Hardware-Level Optimization?

AI can write code that works. But it doesn’t truly understand the machine underneath that code. It doesn’t “see” the CPU, memory layout, or how instructions move through a processor.

Modern performance depends on small hardware details. For example, when code jumps around in memory, it can cause cache misses. That alone can slow a program by 30–50%. The logic may be correct, but the structure hurts speed. AI won’t notice that.

It also struggles with low-level concerns like:

- CPU cache behavior and memory alignment

- Branch prediction and pipeline stalls

- SIMD vectorization for faster loops

- Power usage in embedded systems

- Strict timing limits in real-time software

In practice, we often see AI place complex conditions inside performance-critical paths. It rarely suggests restructuring a loop to take advantage of hardware instructions. In embedded or real-time systems, this gap becomes even more serious because timing must be exact.

That’s why we rely on secure coding practices from the beginning. We deliberately review hardware-level constraints early, instead of assuming the AI handled them.

How do Token-by-Token Predictions Create Code Bloat?

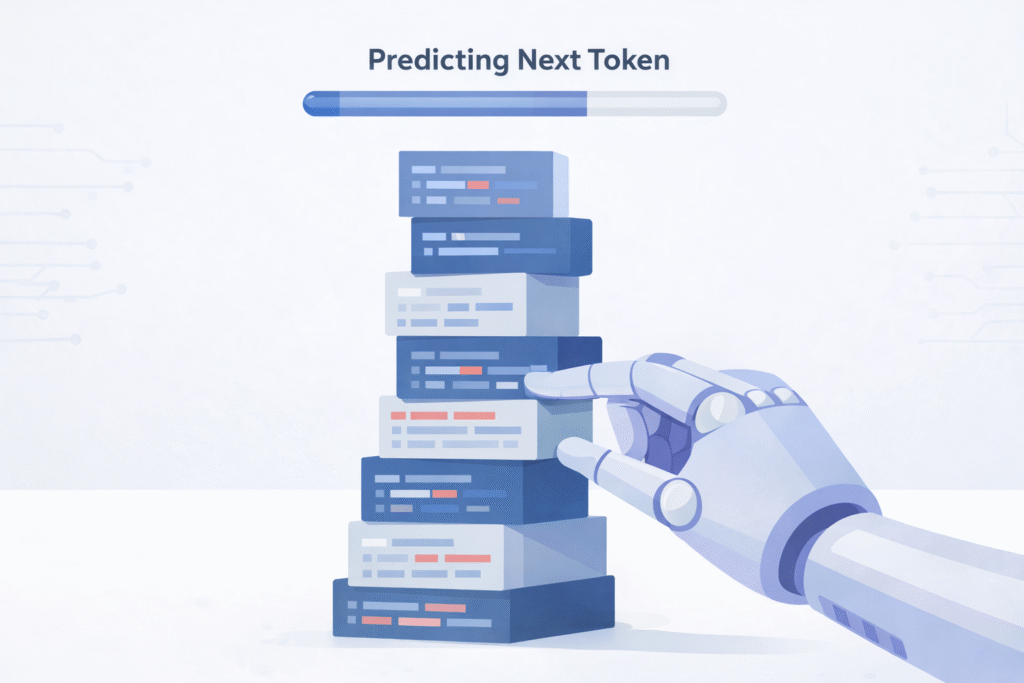

AI writes code one token at a time. It predicts the next word or symbol based on patterns it has seen before. That approach works well for short snippets. But when the code gets longer, small imperfections start to stack up.

Even if each prediction is 99% correct, the odds that a long function is perfectly structured drop fast. Tiny issues compound. Over time, that leads to code bloat.

We might see things like:

- The same validation logic repeated in two different places

- A long helper function where one clear line would work

- Extra variables that don’t add real value

- Nested conditions that could be simplified

- Defensive checks that overlap

Each piece looks harmless on its own. Together, they make the system heavier, slower, and harder to read.

This pattern reflects many of the broader challenges and common pitfalls teams face when relying too heavily on automated output without review.

That’s why we treat AI-generated code as a draft, not a finished product. With secure coding practices, we merge duplicate logic, tighten up structure, and simplify control flow before release. Without that cleanup step, small inefficiencies quietly grow into larger maintenance problems.

Why does AI Struggle With Scalability and Long-Term Maintainability?

AI is very good at solving the task in front of it. Give it a clear prompt, and it will produce working code. The problem is that it focuses on the present requirement, not the future of the system.

Software rarely stays the same. Data grows. Users increase. Features expand. AI does not naturally plan for that growth. It writes for today’s input, not next year’s scale.

As a result, we often see:

- Hard-coded limits that break under real traffic

- Large functions that handle too many responsibilities

- Tight coupling between components

- Weak separation between business logic and infrastructure

- Minimal thought about logging, monitoring, or future refactoring

The code may pass tests with small datasets. But under real-world load, performance drops and bugs appear. Then every update feels risky because no one fully understands how pieces connect.

We’ve seen teams move fast with AI at first, then slow down later when maintenance becomes painful. That’s why we apply secure coding principles early. Clear structure and modular design make growth manageable instead of chaotic.

What Common Inefficiencies Repeatedly Appear in AI-Generated Code?

Certain inefficiencies show up again and again in AI-generated code. The model often repeats patterns it has seen before, without weighing the real performance cost. The code works, but it is not always lean.

Industry data supports this pattern. In the CodeRabbit engineering report “AI vs human code gen report: AI code creates 1.7x more issues”, researchers found that

“Performance inefficiencies showed the largest relative jump… excessive input/output operations appeared nearly eight times more frequently in AI-generated submissions.” – David Loker.

Here are some common examples:

| Issue | Real-World Impact |

| Repeated calculations | Extra CPU work that slows response time |

| Duplicate logic blocks | Harder updates and higher bug risk |

| Overly complex branching | Lower throughput due to pipeline stalls |

| Unnecessary memory allocation | Increased memory pressure and slower execution |

Studies on modern processors suggest poor branching alone can reduce throughput by around 20% in some workloads. That is not a small drop. In many cases, these inefficiencies are closely related to the problem with complex if/else AI logic, where layered conditional paths create both performance drag and maintenance risk.

We also see:

- Extra loops that could be merged

- Temporary variables that add noise

- State handling that feels scattered

One issue alone may not break a system. But layered together, they reduce speed and stability.

That is why we run a secure coding review before deployment. We check hot paths, remove duplication, and simplify control flow so small inefficiencies do not turn into long-term performance problems.

Why do Developers Report “AI Slop Code” in Real Projects?

Many developers use the phrase “AI slop code” to describe something frustrating. The code runs. It passes basic tests. But no one on the team fully understands how it works or why certain choices were made.

That creates a strange tension. Progress feels fast at first. Features ship quickly. Then problems start to appear during maintenance.

Common complaints include:

- Debugging takes longer than expected

- Logic feels opaque or overly complex

- Variable names lack clear meaning

- Hidden edge cases surface later

- Small changes break unrelated parts of the system

We have seen this pattern ourselves. A feature gets built in record time. Later, a small update turns into hours of tracing through nested conditions and scattered logic. Confidence in the codebase starts to drop, and developers hesitate to make changes.

Many of these frustrations overlap with what are often described as the main risks of vibe coding, where speed takes priority over structure and clarity.

That is why we rely on secure coding practices as a checkpoint. We review structure, improve clarity, add meaningful tests, and document intent. The goal is simple: turn the AI’s rough draft into code the entire team can understand, trust, and maintain over time.

Can AI-Generated Code be Optimized Effectively by Humans?

Yes, AI-generated code can be improved by humans. But it only works well if we treat the AI like a junior assistant, not the final decision-maker. The AI gives us a draft. An experienced developer turns that draft into something ready for production.

Research and real-world testing show that profiling and refactoring can recover anywhere from 25% to 60% of lost performance. That is a major gain. The key is having a clear process instead of assuming the first version is good enough.

Strong teams usually:

- Run performance profiling to locate real bottlenecks

- Simplify complex logic before optimizing

- Remove duplicated or unnecessary steps

- Select data structures based on actual workload needs

- Re-test after each meaningful change

Optimization is not about guessing. It is about measuring, adjusting, and validating.

When we combine AI’s drafting speed with disciplined secure coding practices, the results improve significantly. The AI accelerates the starting phase. Human review ensures the final system is efficient, understandable, secure, and built to handle growth over time.

FAQ

What are common AI code generation pitfalls?

Common AI code generation pitfalls often come from statistical pattern replication errors. Models copy patterns from mixed training data quality issues without judging long-term cost. This behavior leads to redundant computations in AI output, duplicate code clutter bases, and verbose AI-generated functions that are harder to maintain.

Anti-pattern propagation training spreads weak structures across projects. Over time, these issues increase maintainability debt accumulation and trigger a technical debt explosion AI teams must later repair.

Why do LLM token prediction flaws hurt performance?

LLM token prediction flaws stem from probabilistic next-token inefficiency. The model predicts what looks correct based on patterns, not what performs best in production. This approach results in inefficient algorithm selection and poor data structure choices by models.

Context window limitations code also reduce long-range reasoning. These weaknesses create throughput degradation scripts, noticeable latency spikes inefficient gen workloads reveal, and performance regression risks during scaling.

How does lack of hardware awareness in codegen affect systems?

Lack of hardware awareness in codegen means the model ignores real processor behavior. Cache locality neglect LLMs display and branch prediction ignorance models repeat can reduce performance. We may also see pipeline stall loops generated and vectorization missed opportunities AI fails to consider.

Dynamic allocation overuse AI inserts increases memory pressure. In embedded systems code fails AI cases, real-time constraints ignored models cause non-deterministic timing AI problems and power consumption blind spots LLMs rarely address.

Why does AI output struggle with scalability bottlenecks?

Scalability bottlenecks AI code faces often result from structural design choices. Monolithic structures collapse under growth, while microservices bloat AI designs increase operational complexity.

Models frequently introduce database query waste models produce and network call redundancy generated code repeats. Fragmentation from allocations and garbage collection overheads add further strain. These factors raise churn rate spikes AI output triggers and increase performance regression risks as traffic grows.

How can AI-generated scripts create debugging paradoxes?

Debugging paradoxes generated scripts create occur when phantom logic branches AI inserts hide real control flow. Subtle edge case misses make failures difficult to reproduce. Concurrency bugs AI code introduces and race condition oversights LLMs miss increase instability.

Deadlock prone patterns output complicate tracing. Static analysis flags ignored and unit test evasion tendencies reduce early detection. These combined problems fuel integration hell scripts and long-term maintainability debt accumulation.

Why AI Might Produce Inefficient Code and What Teams Should Do Next

AI produces inefficient code because it predicts patterns, not engineered outcomes. It does not factor in execution cost, hardware limits, or future scale. We have reviewed projects where fast AI output quietly increased technical debt.

Without discipline, that debt compounds. With the right process, it does not have to. In our bootcamp, we train developers to apply secure coding practices that keep speed and quality aligned. Ready to build safer code? Join us

References

- https://www.diabolocom.com/blog/bias-ai-models-learn-drift/

- https://www.coderabbit.ai/blog/state-of-ai-vs-human-code-generation-report