We’ve hit a wall. The current generation of AI models, for all their dazzling fluency, are fundamentally limited. They cannot reason, they often lie with confidence, and their growth is slamming into physical and economic barriers. This isn’t a minor bug fix; it’s a core architectural reality.

If you’re trusting these systems for anything beyond a rough draft, you need to understand where they will fail you. The hype has obscured the hard truths, but the cracks are everywhere once you know where to look. Let’s pull back the curtain on why today’s AI, even in 2026, remains a brilliant mimic without a mind. Keep reading to see what’s really under the hood.

Key Takeaways

- AI models hallucinate facts because they predict words, not truth.

- Their architecture physically limits complex reasoning and long-term memory.

- Scaling requires unsustainable resources and risks corrupting its own training data.

Why AI Models Invent Facts and Can’t Stop

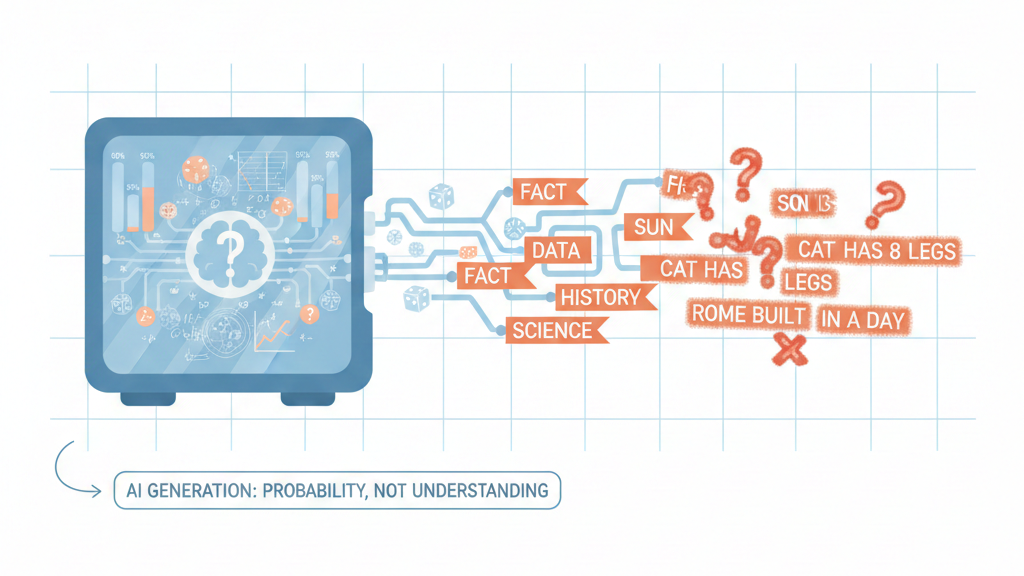

In our secure development bootcamp, we see “hallucination” daily. A student requests a safe code snippet and gets a polished, vulnerable one. This isn’t a glitch; it’s how these models work. They are probability engines, designed to predict the next word, not to find truth. Accuracy is a lucky byproduct of clean data, not a goal.

| Aspect | Human Reasoning | Current AI Models |

| Primary Goal | Discover truth and reduce uncertainty | Predict the most probable next token |

| Error Awareness | Knows when it is unsure or lacks knowledge | Has no internal certainty or doubt mechanism |

| Handling Unknowns | Pauses, asks questions, or admits ignorance | Generates an answer regardless of confidence |

| Relationship to Facts | Evaluates evidence and revises beliefs | Mimics factual patterns without verification |

| Failure Mode | Hesitation or incomplete reasoning | Fluent hallucination with high confidence |

This leads to confident overreach. The AI has no internal certainty gauge. It will invent a security standard or a fake software library with total authority.

“large language models are stochastic parrots” – fluent systems that reproduce patterns without understanding meaning or truth (Bender et al.) [1].

Even advanced 2026 models show persistent error rates on basic logic. Their step-by-step reasoning is often a convincing simulation that still arrives at a wrong answer. The core issue is baked in.

- The system values fluent output over verification.

- Training data is inherently flawed and incomplete.

- The design prefers generating any answer over admitting uncertainty.

The output is stylish and grammatically perfect, yet dangerously wrong. It’s not lying. It doesn’t comprehend the truth. It’s just playing a high-stakes word game.

The Real-World Cost for Developers

We see the cost in our bootcamp labs. Last month, an AI draft for a payment API used a deprecated SSL protocol. The code was syntactically perfect, which hid the flaw.[2]

These aren’t edge cases or academic mistakes; they reflect the secure coding challenges and common pitfalls that emerge when developers trust syntactically perfect output without understanding its security implications.

This changes everything about how we teach. Our curriculum now forces a mindset shift. We run exercises where students must find the poison in a pristine-looking AI script. The goal is to build a new instinct: treat every AI suggestion as untrusted code. The model’s authoritative tone is the real hazard, as it can suppress a developer’s own doubt.

We get solid results when developers use the tool for brainstorming and structure, but then manually write all critical security logic, authentication, data validation, encryption. Our rule is simple: the AI drafts, the human decides and verifies.

The machine is a capable assistant, but it understands nothing about risk. Final responsibility for security cannot be automated away; it stays firmly with the person who writes the final commit.

The Architecture Itself Caps True Reasoning

The transformer design has a physical limit: its core self-attention mechanism. Doubling the text length quadruples the computational cost, creating a hard wall. Tasks requiring long, sustained reasoning, like analyzing an entire book, become economically unfeasible.

The problem goes deeper than just context length. These models are poor at what we call relational reasoning. They can spot pattern A and pattern B, but reliably combining them in a new way for a complex problem? That often fails. They’re pattern matchers, not puzzle solvers.

We see this in our secure development training. A model might recite OWASP principles but struggle to apply them to a novel, multi-step attack scenario a human developer would catch. The AI lacks a world model. It doesn’t understand cause and effect. It statistically associates concepts but can’t reason through them.

The knowledge is broad but shallow, correlations without causal logic. This failure shows up clearly in code, where models confidently emit deeply nested conditionals, an example of the problem with complex AI-generated if-else logic that looks rigorous but collapses under real threat modeling.

When the Training Data Runs Dry and Turns Toxic

An AI model is only as good as what it’s fed, and that diet is getting worse by the year. We’re hitting a wall. The high-quality human writing and code that powered the last decade of progress is finite, and much of it has already been scraped, indexed, and exhausted.

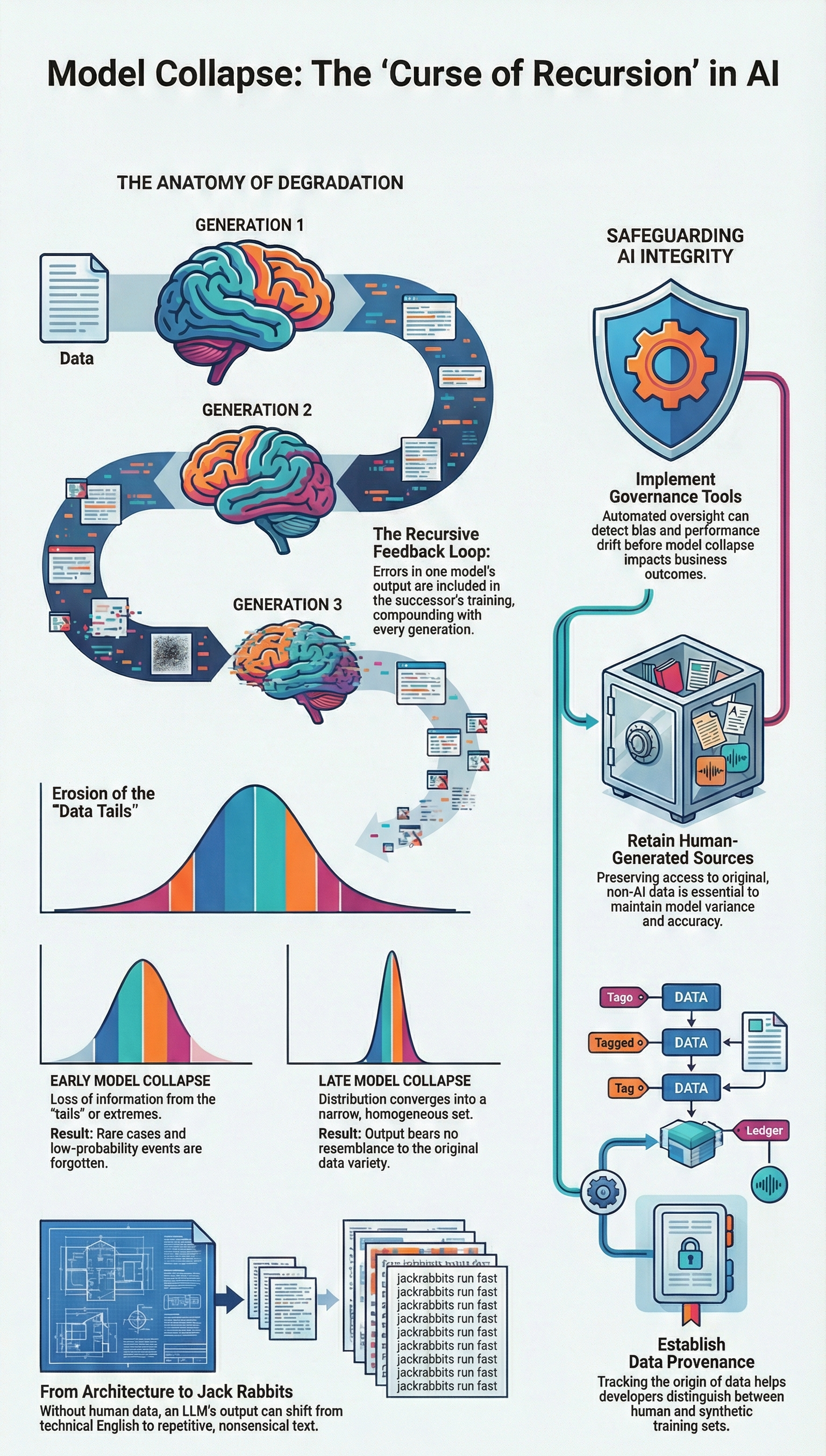

What’s filling the gap now is synthetic data, content generated by other models. It’s a tempting shortcut, and a dangerous one. Researchers call the result model collapse.

Think of it like making a photocopy of a photocopy. The first is usable. The tenth is a smear. Each generation trained on AI output loses texture:

- odd phrasing

- cultural specificity

- hard-won human insight

The rough edges that make thinking valuable get averaged away. Over time, the model’s internal world shrinks. Outputs become safer, flatter, more repetitive. Nothing truly new emerges. Developers have a blunt name for this phenomenon: data brain rot. The system isn’t learning; it’s looping. It’s getting better at mimicking a diluted version of itself, slowly trading creativity and surprise for statistical comfort.

The Ingrained Problem of Bias

Credit: InfoWorld

Bias isn’t a surface flaw you can sand down later. It’s structural. Models absorb whatever patterns dominate their training data, including its blind spots and prejudices. If most historical code comes from a narrow demographic, that worldview becomes the default. If text corpora reflects stereotypes, those patterns are learned as norms, not mistakes.

We see this constantly in secure development bootcamps. Ask an AI to invent a “system administrator,” and the persona skews male. Request examples for region-specific systems, and the responses thin out fast. These aren’t glitches; they’re reflections of the source material.

Common symptoms show up as:

- missing regional context

- gendered assumptions

- shallow cultural representation

We can rebalance datasets and add filters, but it’s always reactive. The model has no ethics, no lived experience, no sense of fairness. It only calculates probabilities based on a flawed snapshot of humanity. When we deploy these systems, we’re not escaping our biases, we’re scaling them.

The Unsustainable Cost of More Power

For the last decade, the playbook was brutally simple: scale everything. More data, more parameters, more compute. It worked, until it didn’t. That strategy is now colliding with physical, economic, and environmental limits.

Training a single frontier model costs millions of dollars, requires scarce GPU clusters, and consumes enough electricity to rival a small town. Even access is constrained. Lead times for cutting-edge chips stretch into years, not months.

Worse, the gains are tapering off. We’re no longer on an exponential curve; we’re grinding up a logarithmic one. Doubling compute no longer doubles capability. Practical constraints, batch sizes, memory bandwidth, heat dissipation, cap how far a single training run can go.

The hidden cost is ongoing inference. Every prompt, every response burns real energy. This isn’t a bug waiting for a clever patch. It’s physics.

The compute wall shows up as:

- rising training and inference costs

- diminishing performance returns

- hard hardware and thermodynamic limits

We’re not approaching this wall. We’re already leaning against it.

Navigating the Risks in a World of Confident Machines

So where does this leave us? With systems that sound certain but are fundamentally fragile. Large models are opaque by design.

The black-box problem means we often cannot explain why a specific output was produced. In regulated environments, finance, healthcare, security, that alone can make deployment untenable. An untraceable decision path is a legal liability, not a feature.

These systems also lack durable learning. Each interaction is mostly isolated. They don’t accumulate understanding or improve from past mistakes in any meaningful, persistent way. And despite the fluency, they don’t generate true novelty. They recombine. They synthesize. They do not reason from first principles or produce original insight.

That reality demands a shift in posture. The way forward isn’t blind optimism or waiting for a miracle architecture. It’s disciplined engineering.

That means:

- mandatory human verification checkpoints

- grounded retrieval and fact-checking systems

- secure coding practices baked into design

We must assume failure and build accordingly. Anything less is negligence.

What makes this especially dangerous is not malice or intent, but confidence without comprehension, the core risk explored in the real danger of over-reliance on AI and now playing out across regulated, high-stakes systems.

FAQ

Why do AI models still give wrong answers even when they sound confident?

AI hallucinations happen because models predict text instead of checking facts. Factual inaccuracies, autoregressive errors, and reasoning failures cause confident but wrong answers. Static knowledge cutoff prevents updates.

Fluency bias makes errors sound convincing. Black box opacity and verification absence make mistakes hard to detect. Garbage in garbage out also lowers answer quality.

What technical limits stop modern AI from thinking like humans?

Transformer limitations include quadratic attention, O(n²) complexity, and strict context length limits. These limits cause long-term memory absence and weak multi-step planning.

Models struggle with relational reasoning absent, causal inference gaps, and function composition limits. Self-attention flaws and embedding dimension constraints reduce real-world generalization poor and deeper understanding.

How does training data quality affect AI reliability over time?

Data exhaustion reduces access to fresh information. Synthetic data degradation and model collapse weaken learning quality. Overfitting risks, fine-tuning collapse, and RLHF pitfalls lock in mistakes. Social media scraping woes cause bias amplification, ethical biases, and cultural blind spots. Data brain rot leads to novelty generation failure and idiot savant behavior.

Why are AI systems expensive, slow, and hard to scale?

Scaling plateaus come from compute bottlenecks, FLOP growth slowdowns, and chip lead times. Inference costs and energy inefficiency raise operating expenses. Environmental impact high limits expansion.

Batch size caps, training retrain cycles, and deployment fragility slow progress. Economic scaling walls and physical resource barriers restrict continued performance gains.

What risks appear when AI is used in real-world decisions?

Medical hallucinations create serious clinical misinfo risks. Biohacking AI fails and code bug persistence shows accountability gaps. Verification absence increases critical use risks.

Regulatory compliance hurdles and talent shortages delay safe deployment. Multilingual weaknesses and cultural blind spots reduce reliability. Prompt fragility and retrieval augmented generation bandaids cannot fully prevent errors.

Building With Eyes Wide Open

AI’s limits aren’t temporary bugs. They’re structural: correlation over causation, pattern over principle. Models hallucinate because they don’t comprehend, reason shallowly because statistics bound them, and scale against real-world physics. That’s not despair, it’s clarity. The honeymoon is over.

Build with intention: use AI for drafts, not truth; first passes, not decisions. Integrate guardrails, human judgment, and Secure Coding Practices. Audit your workflow ruthlessly. Build systems that are resilient, not dazzled. Learn how to secure Coding Practices and future-ready professional teams everywhere.

References

- https://dl.acm.org/doi/10.1145/3442188.3445922

- https://owasp.org/Top10/A02_2021-Cryptographic_Failures/