You’re not just writing code now; you’re writing instructions, shaping mood as much as logic, and the machine actually responds. Vibe coding in 2025 grew out of one post, then a thousand demos, then a quiet revolution: people talking to computers the way they talk to friends or teammates.

It’s the latest step in a long arc of hiding complexity behind better language, and while it’s supercharged what individuals and small teams can ship, it’s also opened doors to new kinds of bugs, leaks, and misuse. Keep reading to see how we got here, what changed, and what’s next.

Key Takeaways

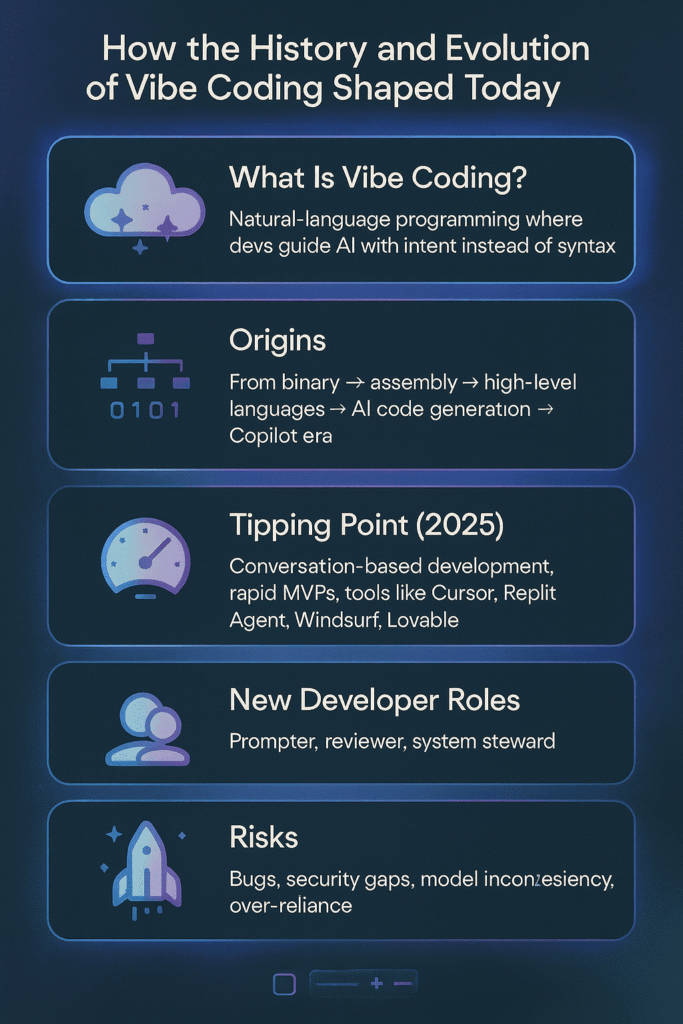

- Vibe coding evolved from decades of abstraction, culminating in AI that understands high-level intent.

- It enables rapid prototyping but introduces new challenges in code security and scalability.

- The developer’s role is shifting from coder to prompter, requiring a hybrid skill set.

The Long Road to Natural Language

You can trace vibe coding back to a simple wish: we want to talk to computers the way we talk to each other. That’s not a side story; it is the history of programming. At first, all we had was binary, raw ones and zeros.

Then assembly came along with mnemonics like ADD and JMP, tiny handles our brains could actually grab. That was the first big lift away from the metal. High-level languages like FORTRAN, C, and later Python and JavaScript pushed us even further, letting us think in loops, functions, and data structures instead of registers and opcodes.

Each new layer didn’t just make code shorter; it made it feel more like thought.

Early AI helpers were small and almost shy. Autocomplete filled in variable names. Linters pointed out missing semicolons, unused imports, simple logic slips. Useful, yes, but not mind-bending.

The real shift started when research groups began asking a deeper question: what if code itself could be treated like language? Projects like Microsoft’s DeepCoder in the late 2010s hinted at an answer.

Given input-output examples, a neural network could guide a search that assembled short, working programs. These were tiny scripts, not full systems, but the point landed: programs formed patterns, and those patterns were learnable.

Several ingredients started to align:

- Transformer models that could handle long-range context in text and code.

- Massive public code corpora, especially from GitHub, exposing models to billions of lines.

- Reinforcement learning and feedback loops that nudged models toward more correct, more practical outputs.

Then GitHub Copilot arrived in 2021 and shifted the ground under everyone’s feet. Built on OpenAI’s Codex, it could read a comment like “// fetch user by id and handle errors” and spin out full functions.

Some teams reported productivity boosts in the 30–50 percent range. A recent enterprise-scale study points the same way: integrating AI-assisted tools led to a 31.8% reduction in review-cycle time, and top-adopting teams pushed 61% more code to production, a substantial uplift in throughput when AI is used properly. [1]

But the deeper change was cognitive: developers stopped treating the editor as a blank page and started treating it as a conversation partner, offloading boilerplate and focusing their energy on structure, intent, and edge cases.

That mental shift was the bridge from code completion to vibe coding. By 2025, about 84% of developers use or plan to use AI tools, a clear sign that this conversational style of coding is mainstream. [2]

The Tipping Point and Its Tools

The shift didn’t start with a product launch or a research paper; it started with a feeling that finally got a name. Andrej Karpathy’s 2025 post didn’t invent vibe coding; it just held up a mirror. A lot of developers read it and thought, “Yes, that’s exactly what I’ve been doing.”

Once there was a word for it, the dam kind of cracked. Conversation became the new default interface, and code started to feel like a byproduct instead of the main event. That inversion is the core philosophy of this new way of building software.

From there, the tools came fast:

- Cursor turned the editor into a dialogue, where you could argue with your own code.

- Replit Agent folded AI into the environment so deeply that “run, fix, refactor” felt like one continuous loop.

These weren’t just plugins. They made the whole workspace feel like you were pairing with a tireless, sometimes overeager, partner.

Founders and small teams grabbed onto this right away. Inside accelerators like Y Combinator, the pitch was simple and hard to ignore: one person, one laptop, one weekend to ship an MVP that used to require a full team and a month. A new wave of tools targeted exactly that:

- Windsurf, an agentic AI editor built around generating and editing code from natural language.

- Lovable, oriented around spinning up whole applications from prompts.

All of them leaned into the same promise: describe what you want, and the system will scaffold, generate, and refine the rest. The floor for building software dropped, and it keeps dropping.

That drop came with a hidden fracture in the role of “programmer.” You weren’t just a coder anymore. You became:

- A prompter, responsible for shaping clear, detailed intent.

- A reviewer, scanning AI-generated code for logic gaps, security issues, performance traps.

The momentum felt amazing: features appeared in hours, experiments multiplied, and prototypes that would’ve died in a notebook now ran in production-like environments.

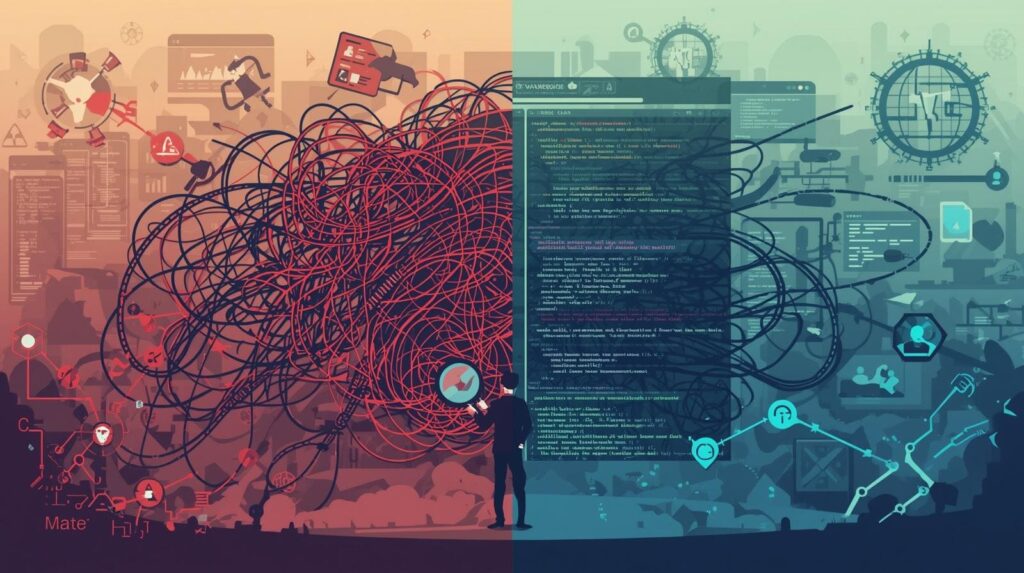

But a quiet question followed close behind each commit: just because the code was fast to write, did it deserve to be trusted? Was that clever-looking function actually safe under load, under attack, and under weird edge cases no one had spelled out? That tension between speed and scrutiny is where vibe coding stopped being a novelty and became a discipline.

Vibe Coding as a Discipline, Not a Party Trick

Once the initial “wow” faded, the real work began. Vibe coding turned out to be less about asking the model for magic, and more about learning how to aim it.

Good practitioners started doing a few repeatable things, the real fundamentals of working with AI-generated code:

- Writing clear specs in plain language before writing prompts.

- Setting constraints: performance budgets, security rules, style guides.

- Iterating in small loops: “generate → run → break → ask why → refine.”
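The “generate → run → break → ask why → refine” loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any tool’s actual workflow. The model call is replaced by a hypothetical `Generator` callable, and `fake_generate` is a scripted stand-in that returns a naive draft first and a fixed one after seeing feedback. The `slugify` task and its test cases are likewise invented for illustration.

```python
from typing import Callable, List, Optional, Tuple

# A candidate implementation returned by the model: here, a str -> str function.
Candidate = Callable[[str], str]
# Hypothetical stand-in for a model call: (prompt, feedback) -> candidate.
Generator = Callable[[str, Optional[str]], Candidate]

# Edge cases from the spec, as (input, expected output) pairs.
CASES: List[Tuple[str, str]] = [
    ("Hello World", "hello-world"),
    ("  spaced  out ", "spaced-out"),
]


def check(candidate: Candidate) -> Optional[str]:
    """Run the candidate on every case; return a failure report, or None if all pass."""
    for given, expected in CASES:
        try:
            got = candidate(given)
        except Exception as exc:  # a crash is also useful feedback
            return f"{given!r} raised {type(exc).__name__}: {exc}"
        if got != expected:
            return f"{given!r} -> {got!r}, expected {expected!r}"
    return None


def refine_loop(
    prompt: str, generate: Generator, max_rounds: int = 3
) -> Tuple[Optional[Candidate], List[str]]:
    """Generate, run, and feed failures back until the checks pass or rounds run out."""
    feedback: Optional[str] = None
    failures: List[str] = []
    for _ in range(max_rounds):
        candidate = generate(prompt, feedback)  # generate
        feedback = check(candidate)             # run it and try to break it
        if feedback is None:
            return candidate, failures          # every case passes
        failures.append(feedback)               # "ask why": keep the report for the next round
    return None, failures


def fake_generate(prompt: str, feedback: Optional[str]) -> Candidate:
    """Scripted stand-in for a model: naive first draft, fixed once it sees feedback."""
    if feedback is None:
        return lambda s: s.lower().replace(" ", "-")
    return lambda s: "-".join(s.lower().split())


impl, failures = refine_loop("Write slugify(s) that lowercases and hyphenates words.", fake_generate)
print(len(failures))  # 1
if impl is not None:
    print(impl("  Vibe   Coding "))  # vibe-coding
```

The first draft passes the easy case but fails on repeated spaces. That failure report is what the next round receives, which is the "ask why" step made concrete.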

Prompts became closer to micro-design docs:

“You are writing a handler for untrusted input.

It must sanitize HTML, reject scripts, and log suspicious patterns.

Latency budget: 20ms p95.

Max dependency count: 1 external library.”

Instead of “write me a function to clean user input,” the developer now framed intent, risk, and quality all together. The AI still wrote the lines, but the human set the boundaries.
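One way to make that framing repeatable is to keep the constraints as data and render the prompt from them. The sketch below is a hypothetical `PromptSpec` structure invented for illustration; no tool mentioned here requires it. Rendered with the values from the example prompt in this section, it reproduces that prompt line for line.

```python
from dataclasses import dataclass, field
from typing import List, Optional


def join_clauses(items: List[str]) -> str:
    """Join clauses as 'a', 'a and b', or 'a, b, and c'."""
    if len(items) <= 1:
        return "".join(items)
    if len(items) == 2:
        return f"{items[0]} and {items[1]}"
    return ", ".join(items[:-1]) + ", and " + items[-1]


@dataclass
class PromptSpec:
    """Hypothetical structure for a prompt written as a micro-design doc."""
    role: str
    requirements: List[str] = field(default_factory=list)
    latency_p95_ms: Optional[int] = None
    max_external_deps: Optional[int] = None

    def render(self) -> str:
        """Turn the intent, risk, and quality constraints into prompt text."""
        lines = [f"You are writing {self.role}."]
        if self.requirements:
            lines.append(f"It must {join_clauses(self.requirements)}.")
        if self.latency_p95_ms is not None:
            lines.append(f"Latency budget: {self.latency_p95_ms}ms p95.")
        if self.max_external_deps is not None:
            noun = "library" if self.max_external_deps == 1 else "libraries"
            lines.append(f"Max dependency count: {self.max_external_deps} external {noun}.")
        return "\n".join(lines)


spec = PromptSpec(
    role="a handler for untrusted input",
    requirements=["sanitize HTML", "reject scripts", "log suspicious patterns"],
    latency_p95_ms=20,
    max_external_deps=1,
)
print(spec.render())
```

Keeping constraints as fields rather than free text makes them easy to diff, review, and reuse across prompts.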

Vibe coding in that sense isn’t lazy. It’s demanding. It forces you to think harder about what you actually want, before a single token of code hits the file.
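For a sense of what the human still has to review, here is a minimal sketch of the kind of handler the example prompt in this section might produce. It uses only the Python standard library. The pattern lists, the `RejectedInput` exception, and `handle_untrusted` are illustrative assumptions, and the latency budget is not measured here.

```python
import html
import logging
import re

logger = logging.getLogger("untrusted_input")

# Illustrative patterns only. Script vectors are rejected outright;
# other suspicious markup is logged and then neutralized by escaping.
SCRIPT_PATTERNS = [
    re.compile(r"<\s*script", re.IGNORECASE),
    re.compile(r"javascript\s*:", re.IGNORECASE),
]
SUSPICIOUS_PATTERNS = [
    re.compile(r"\bon\w+\s*=", re.IGNORECASE),  # inline event handlers, e.g. onerror=
    re.compile(r"<\s*iframe", re.IGNORECASE),
]


class RejectedInput(ValueError):
    """Raised when input contains a script vector."""


def handle_untrusted(text: str) -> str:
    """Reject scripts, log suspicious patterns, and return HTML-escaped text."""
    for pattern in SCRIPT_PATTERNS:
        if pattern.search(text):
            logger.warning("rejected input matching %s", pattern.pattern)
            raise RejectedInput(f"script content matched {pattern.pattern!r}")
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(text):
            logger.warning("suspicious input matching %s", pattern.pattern)
    # Escaping &, <, >, and quotes means no markup survives into the page.
    return html.escape(text, quote=True)


print(handle_untrusted("Hello <b>world</b> & friends"))
# Hello &lt;b&gt;world&lt;/b&gt; &amp; friends
```

Blocklists are easy to bypass, so a reviewer would likely spend the prompt's one-library budget on a maintained allowlist-based sanitizer. Judging trade-offs like that is exactly the work the prompt cannot do for you.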

Secure Vibe Coding and AI-Driven Code Security

Security, which used to feel like a specialization, moved right into the center of everyday work. Secure coding practices stopped being a “nice to have” add-on and turned into baseline survival skills.

Teams started to:

- Enforce structured, human-led code reviews for AI-generated changes.

- Integrate automated security scanners (SAST/DAST) directly into CI/CD pipelines.

- Track dependency risk more carefully, since AI often pulled in libraries without context.

- Add security clauses into prompts: “no eval,” “no dynamic SQL,” “sanitize all external input.”
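To show what a “no dynamic SQL” clause rules out, here is a small sketch using Python's built-in `sqlite3` module against a throwaway in-memory table. The table and the helper names are made up for the example. The unsafe version is deliberately vulnerable and exists only for contrast.

```python
import sqlite3
from typing import List, Tuple

Row = Tuple[int, str]


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> List[Row]:
    # Dynamic SQL: user input is pasted into the query text.
    # This is the pattern a "no dynamic SQL" clause forbids.
    return conn.execute(
        f"SELECT id, name FROM users WHERE name = '{name}' ORDER BY id"
    ).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> List[Row]:
    # Parameterized query: the driver binds `name` as a value, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ? ORDER BY id", (name,)
    ).fetchall()


conn = make_db()
payload = "x' OR '1'='1"
print(find_user_unsafe(conn, payload))  # [(1, 'alice'), (2, 'bob')]: every row leaks
print(find_user_safe(conn, payload))    # []: no user has that literal name
print(find_user_safe(conn, "alice"))    # [(1, 'alice')]
```

Both functions look equally plausible at a glance, which is why the clause belongs in the prompt and the check belongs in review.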

AI itself became a security tool, not just a risk:

- Models used as static analyzers, flagging insecure patterns across large codebases.

- Log analysis agents scanning huge volumes of traces for anomaly patterns humans miss.

- Policy-aware agents that reject code suggestions violating organizational rules.
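A policy-aware gate does not have to be an agent to be useful. The sketch below uses Python's `ast` module to parse a suggestion without running it and to flag direct calls to banned built-ins. The `BANNED_CALLS` set stands in for an organizational rule and, like both function names, is an assumption made for illustration.

```python
import ast
from typing import List

# Hypothetical organizational policy: built-ins that generated code may not call.
BANNED_CALLS = {"eval", "exec"}


def policy_violations(source: str) -> List[str]:
    """Parse `source` (without running it) and report each direct call to a banned name."""
    problems = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in BANNED_CALLS
        ):
            problems.append(f"line {node.lineno}: call to {node.func.id}()")
    return problems


def accept_suggestion(source: str) -> bool:
    """Gate an AI suggestion: accept it only if no policy violations are found."""
    return not policy_violations(source)


suggestion = "x = input()\nresult = eval(x)\nprint(result)\n"
print(policy_violations(suggestion))  # ['line 2: call to eval()']
print(accept_suggestion("print(sum([1, 2, 3]))"))  # True
```

This is a coarse filter. Aliasing (`f = eval`) or calls through `builtins.eval` slip past it, so it complements human review rather than replacing it.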

We ended up in a strange but useful place: AI both creates new attack surfaces and helps guard them. Whether that balance tilts safe or unsafe depends a lot on how seriously teams take review and testing.

The Inevitable Hangover and the Path Forward

Every rush has a morning after, and vibe coding was no exception. Once the glow of instant features and one-weekend MVPs started to fade, people began to notice a pattern: when AI writes 95% of your codebase, you quietly inherit 95% of its blind spots, too.

That meant hidden vulnerabilities, awkward architectures, leaky abstractions, and services that worked fine in demos but struggled under real traffic. A few public failures, like Replit’s AI agent accidentally wiping a user’s database, turned what had been private worries into community-wide warnings.

Underneath the drama, a simple truth surfaced: you still need humans, just in different places.
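One concrete place to put a human back in the loop is a gate in front of destructive database statements issued by an agent. The sketch below is our own illustration, not how Replit or any other tool works. The `DESTRUCTIVE` pattern, `guarded_execute`, and the `deny` reviewer are all hypothetical.

```python
import re
from typing import Callable, List, TypeVar

T = TypeVar("T")

# Illustrative only: statement types this sketch treats as destructive.
DESTRUCTIVE = re.compile(r"^\s*(DROP|TRUNCATE|DELETE|ALTER)\b", re.IGNORECASE)


class ApprovalRequired(PermissionError):
    """Raised when an agent issues a destructive statement without human sign-off."""


def guarded_execute(
    sql: str, execute: Callable[[str], T], approve: Callable[[str], bool]
) -> T:
    """Run `sql` through `execute`, asking `approve` (a human) first if it looks destructive."""
    if DESTRUCTIVE.match(sql) and not approve(sql):
        raise ApprovalRequired(f"blocked without approval: {sql.strip()}")
    return execute(sql)


executed: List[str] = []


def deny(sql: str) -> bool:
    """Stand-in for a reviewer who declines every destructive request."""
    return False


guarded_execute("SELECT * FROM users", executed.append, deny)
try:
    guarded_execute("DROP TABLE users", executed.append, deny)
except ApprovalRequired as err:
    print(err)  # blocked without approval: DROP TABLE users
print(executed)  # ['SELECT * FROM users']
```

Matching on the first keyword is crude. It misses multi-statement strings and an `UPDATE` without a `WHERE` clause. Giving the agent database credentials that cannot drop tables at all is a stronger control, and this gate would sit on top of that.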

The developer role stretched in new directions:

- Intent shaper – setting precise goals, constraints, and “vibes” the AI should follow.

- Code auditor – catching logic flaws, security gaps, and subtle performance issues.

- System steward – thinking about long-term maintainability, not just first-run success.

That mix, AI speed plus human skepticism, became the only sustainable way forward.

Looking ahead, vibe coding is less about raw generation and more about steady reliability. The tools are likely to evolve toward:

- Architecture-aware agents that understand systems across services, not just single files.

- Feedback-driven learning, where agents improve based on real outcomes and review comments.

- Richer interfaces: voice, diagrams, and domain-specific widgets layered on top of plain language.

The endgame isn’t just “more code, faster.” It’s a workflow where you describe behavior, constraints, and quality expectations, and the system produces code that’s not only runnable but dependable, while you stay accountable for what ships.

FAQ

How does vibe coding history help new developers understand today’s tools?

The history of vibe coding shows how today’s tools grew out of natural language programming and early experiments in LLM code generation. It traces how plain-English prompts, transformer models trained on code, and massive repository datasets shaped today’s coding style.

This helps new developers see why context-aware LLMs matter and how the conversational coding loop became a habit.

What early breakthroughs shaped the evolution of vibe coding?

Several milestones pushed the field forward: DeepCoder’s program synthesis from examples, OpenAI Codex and its precursors, and reinforcement learning guided by code tests. Training on large GitHub datasets also helped models learn real-world code.

These steps drove the move from autocomplete to agents, produced more production-ready output, and set up the rapid MVP development patterns that followed. They also hinted at how neural-network automation could reshape the future of software development.

How does voice command coding fit into the evolution of vibe coding?

Voice-command coding grew as people tried voice interfaces and natural-language programming for simple tasks. Over time, it blended with AI-assisted coding, where you state high-level intentions and skip most of the syntax.

Tools now follow your words, help you keep creative momentum, and make app building feel easier for non-coders. But developers still need judgment about domain logic.

What risks should teams consider as vibe coding adoption increases?

Teams face privacy concerns, AI-introduced vulnerabilities, and inconsistent model output. They also risk skill erosion when they rely too heavily on agents.

Code review stays essential because hybrid human-AI skills guide safe choices. Good architectural oversight, habits that reduce bias in code, and stronger security scanning help keep a project out of development hell.

How does vibe coding impact startups building fast MVPs?

Startups use vibe coding to accelerate prototyping and cut the hours it takes to ship an MVP. Many solo-developer productivity gains come from automated boilerplate and AI-driven iterative refinement, though founders still need to stay aware of scalability challenges in the generated code.

Some founders follow Andrej Karpathy’s vibe coding ideas or the AI trends among Y Combinator startups. Still, workflows built around the prompter-and-reviewer role keep projects steady and help avoid the vibe coding hangover.

Your New Role in the Vibe

I’ve noticed that vibe coding reflects our urge to move fast, but it doesn’t erase accountability, especially when security is on the line. AI can speed you up, but it also multiplies your mistakes if you don’t understand the fundamentals.

The developers who’ll stand out are the ones who combine AI fluency with secure coding skills. If you want structured, hands-on practice with real attacks and real fixes, not just theory, the Secure Coding Practices Bootcamp is a solid next step.

It helps you ship faster, safer code while keeping both the vibes and the security math in check.

References

- [1] https://arxiv.org/abs/2509.19708

- [2] https://www.index.dev/blog/developer-productivity-statistics-with-ai-tools