Don’t kid yourself – security in code isn’t some afterthought you tack on with a scanner. Real security runs deeper than that. Teams who get it right build defenses into their habits from day one, checking every input and mapping threats before writing a single line. We’ve trained hundreds of developers who thought they were secure until they saw how many holes their code had.

Some of the sharpest teams limit what their developers can access (seems harsh but works). Basic stuff like input validation isn’t optional anymore – not with injection attacks getting craftier each week. Their code holds up because they think like attackers, not just coders.

Want to stop playing catch-up with vulnerabilities? Keep reading for battle-tested ways to bake security into your development DNA.

Key Takeaways

- Writing secure code stops problems before they start

- Education beats playing catch-up with security holes

- Smart access limits and backup plans keep systems safer

Secure Coding Practices: The First Line of Defense

Every developer thinks their code is secure, until it’s not. We’ve seen it hundreds of times in our bootcamps – that moment when students realize how exposed their “bulletproof” code really is. Good code isn’t just about making things work; it’s about assuming someone’s trying to break in.

Take input validation (yeah, that thing most coders skip). A quick check might seem tedious, but our students have caught nasty injection attempts that would’ve slipped right through. It’s like locking your door – basic but essential.

Teams need ground rules too. When our advanced classes tackle group projects, we push these standards:

- Double-check every piece of data users send in

- Write code like someone’s looking over your shoulder

- Get another set of eyes on security-critical parts

Nothing fancy here. Just solid habits that keep the bad stuff out. Trust us, fixing security holes later costs way more than preventing them now.
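To make the input-checking habit concrete, here is a minimal Python sketch. It assumes a hypothetical `users` table and a made-up username rule (3 to 32 letters, digits, or underscores); neither comes from a real project, so swap in your own schema and rules. It pairs an allow-list check with a parameterized query, so a value that slips past validation still reaches the database as data rather than as SQL.

```python
import re
import sqlite3

# Assumed allow-list rule: 3 to 32 letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,32}")


def validate_username(raw: str) -> str:
    """Return the username unchanged if it matches the allow-list, else raise."""
    if not isinstance(raw, str) or not USERNAME_PATTERN.fullmatch(raw):
        raise ValueError("invalid username")
    return raw


def find_user(conn: sqlite3.Connection, raw_username: str):
    """Look up a user with a parameterized query, never string concatenation."""
    username = validate_username(raw_username)
    # The "?" placeholder makes the driver bind the value as data, not SQL.
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cursor.fetchone()
```

With this in place, a classic probe like `alice' OR '1'='1` is rejected with a `ValueError` before it ever reaches the query.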

Threat Modeling: Thinking Like an Attacker

Nobody likes meetings about what might go wrong. But after training thousands of developers, we’ve seen how skipping threat modeling leads to nasty surprises later. Sure, security teams handle the heavy lifting, but every coder needs to think like a hacker sometimes.

Picture this: A team starts building without thinking through attacks. Six months later, they’re scrambling to patch holes that were obvious from day one. We’ve watched it happen, and it ain’t pretty. Getting ahead of threats means asking tough questions early – like how someone might trick your authentication or mess with your data flow.

Key attack points to watch:

- User authentication weak spots

- Data validation gaps

- Access control flaws

- API vulnerabilities

- Third-party component risks

Teams who model threats don’t just build better – they sleep better too. And isn’t that worth a few extra planning sessions?
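A threat model doesn’t need special tooling to be useful. As a rough sketch, a team could keep a small threat register in code. The `Threat` class, its field names, and the likelihood-times-impact score below are our own illustrative assumptions (a common convention, not a formal standard), so adjust the scales to whatever your team already uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    area: str             # e.g. "User authentication"
    scenario: str         # how an attacker might abuse this area
    likelihood: int       # 1 (rare) to 5 (expected), the team's own estimate
    impact: int           # 1 (minor) to 5 (severe), the team's own estimate
    mitigation: str = ""  # empty means nothing is planned yet

    @property
    def risk(self) -> int:
        # Simple likelihood x impact score; an assumed convention.
        return self.likelihood * self.impact


def prioritize(threats):
    """Sort highest risk first; on ties, unmitigated threats come first."""
    return sorted(threats, key=lambda t: (-t.risk, bool(t.mitigation)))
```

Reviewing the sorted list at the start of each planning session is one lightweight way to keep the tough questions in front of the team.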

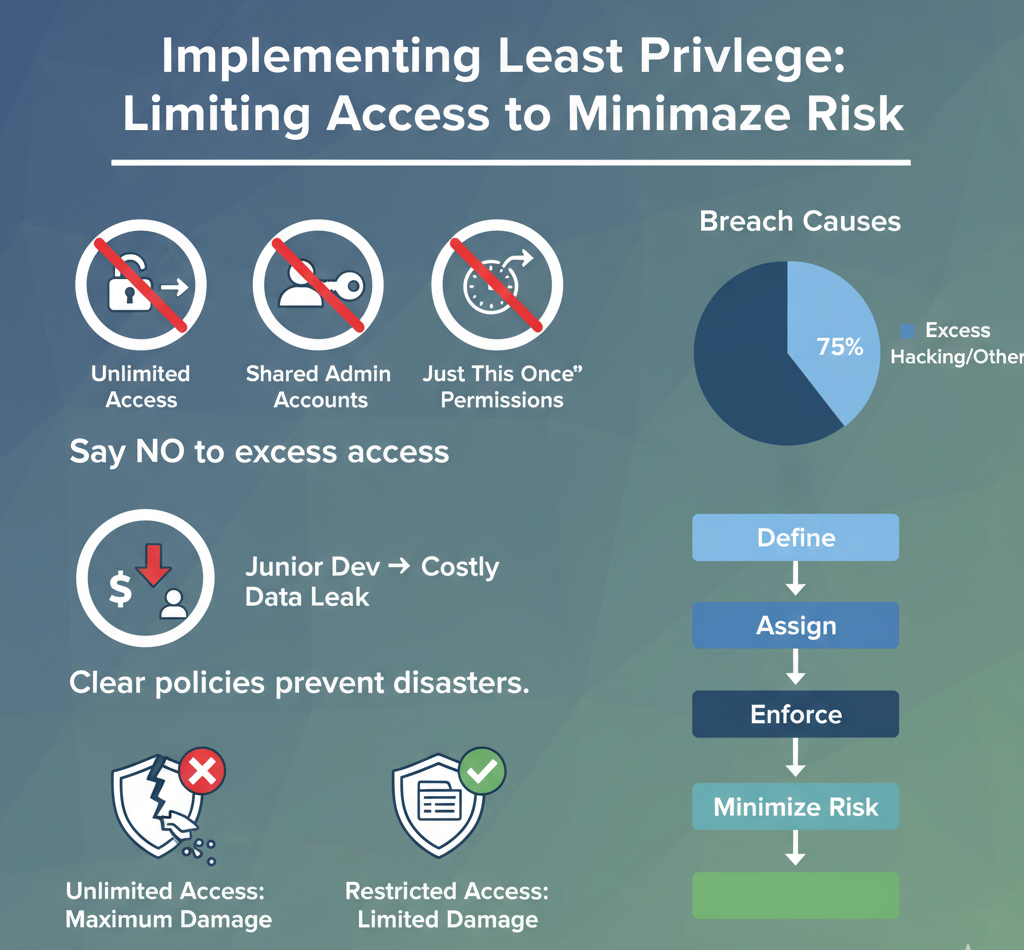

Implementing Least Privilege: Limiting Access to Minimize Risk

The hardest security lesson we teach isn’t about complex code or fancy tools. It’s about saying “no.” No to unlimited access. No to shared admin accounts. No to “just this once” permissions. Most breaches don’t come from clever hacks – they come from people having access they shouldn’t.

Some dev teams fight this at first. They want full access to everything, claiming it makes them more productive. But after showing them real incidents where excess privileges led to disasters, they get it. One client lost millions because a junior dev accidentally pushed sensitive data to a public repo. Could’ve been prevented with basic access limits.

Setting up proper access rules takes time. Teams need clear policies about who gets what permissions and when. But once it’s running, the security benefits are huge. Plus, when something does go wrong, limited access means limited damage.
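Here is one way the “who gets what” policy might look in code. This is a sketch under assumptions: the role names and permissions are hypothetical, and a real system would usually lean on its platform’s access controls rather than a hand-rolled map. The point it illustrates is deny by default, where anything not explicitly granted is refused.

```python
from enum import Enum, auto


class Permission(Enum):
    READ_CODE = auto()
    PUSH_CODE = auto()
    DEPLOY_PROD = auto()
    READ_SECRETS = auto()


# Hypothetical role map: each role gets only what its job needs.
ROLE_PERMISSIONS = {
    "junior_dev": frozenset({Permission.READ_CODE, Permission.PUSH_CODE}),
    "release_manager": frozenset({Permission.READ_CODE, Permission.DEPLOY_PROD}),
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles and unlisted permissions get no access."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


def require(role: str, permission: Permission) -> None:
    """Raise PermissionError unless the role was explicitly granted the permission."""
    if not is_allowed(role, permission):
        raise PermissionError(f"{role!r} lacks {permission.name}")
```

Because an unknown role maps to an empty set, a typo in a role name fails closed instead of quietly granting access.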

Managing Dependencies: Guarding Against Hidden Vulnerabilities

Walk into any modern dev shop and you’ll find code built on hundreds of dependencies. Each one’s a potential time bomb. We’ve spent countless hours helping teams clean up after outdated packages let attackers in through the back door.

Smart teams don’t just grab packages blindly – they treat dependencies like security decisions. That means scanning everything that goes into production code. Automated tools help, but someone’s gotta actually read those security reports. One compromised package can poison an entire system.

Best moves for dependency defense:

- Lock down package versions

- Scan everything before production

- Keep a dependency inventory

- Watch security alerts

- Plan for emergency updates

Most security holes aren’t fancy zero-days – they’re known flaws in old packages nobody bothered to update. Regular maintenance isn’t exciting, but it beats explaining to the boss why customer data’s showing up on the dark web.
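“Lock down package versions” is easy to check automatically. The sketch below assumes a pip-style requirements file and flags any entry not pinned with `==`. It is deliberately simplified: the `unpinned_requirements` helper is our own invention, it ignores hashes, and it will flag lines that use environment markers or URLs, so treat it as a starting point rather than a replacement for a real dependency scanner.

```python
import re

# Matches "name==version", optionally with extras such as "pkg[extra]==1.2.3".
PINNED = re.compile(r"^[A-Za-z0-9._-]+(\[[A-Za-z0-9._,-]+\])?==[A-Za-z0-9._+!-]+$")


def unpinned_requirements(text: str) -> list[str]:
    """Return requirement entries that are not pinned to one exact version."""
    problems = []
    for line in text.splitlines():
        entry = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not entry or entry.startswith("-"):  # skip blanks and pip options
            continue
        if not PINNED.match(entry):
            problems.append(entry)
    return problems
```

Run against a file containing `requests==2.31.0`, `flask>=2.0`, and a bare `numpy`, it reports the last two and leaves the pinned entry alone.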

Continuous Integration and Automated Security Checks

Nobody likes finding security holes in production. Been there, fixed that, lost sleep over it. That’s why we push our students to let machines do the heavy lifting. Automated scans catch the dumb stuff before it hits production, and trust us, there’s always dumb stuff.[1]

Last month, a team’s automated scan caught a SQL injection flaw that slipped past three code reviews. These tools aren’t perfect, but they’re like having a security expert looking over your shoulder 24/7. And unlike humans, they don’t get tired or distracted.

Must-have security checks:

- Static code analysis (finds the obvious stuff)

- Dynamic testing (catches runtime weirdness)

- Dependency scanning (spots bad packages)

- Secret detection (no more leaked API keys)

- Container security scans (because containers leak too)

The best part? These checks run automatically, every time code gets pushed. No excuses, no shortcuts, no “I forgot to run the scanner.”
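To show what one of these checks does under the hood, here is a toy secret detector. The three patterns are illustrative assumptions (an AWS-style access key ID, a private key header, and a quoted credential assignment); real scanners ship far larger rule sets, so this is a sketch of the idea and not something to rely on in a pipeline.

```python
import re

# Illustrative patterns only; real scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hardcoded_credential": re.compile(
        r"(?i)\b(api_key|secret|password|token)\b\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a pattern."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, name))
    return findings
```

A CI step could run `find_secrets` over each changed file and fail the build whenever the returned list is non-empty.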

Security Education: Building Awareness and Culture

Teaching secure coding isn’t about scaring developers straight. It’s about showing them how attackers think. Every week our bootcamp sees lightbulb moments when coders realize their “clever” solution has a massive security hole.

Real security takes more than watching a yearly compliance video. Teams need hands-on practice breaking and fixing code. They need to understand why certain patterns are dangerous, not just that they’re bad. One team leader told us their breach attempts dropped 60% after getting their developers into regular security workshops.[2]

Security knowledge spreads like office gossip. One person learns something cool about breaking authentication, and suddenly everyone’s talking about token validation. That’s the culture shift we’re after. When developers start sharing security tips during lunch breaks, you know a security awareness culture is taking hold.

Incident Response Mindset: Planning for the Inevitable

Credit: Unscripted with David Raviv

Breaches happen. Full stop. After training hundreds of teams, we’ve learned that acceptance leads to better preparation. The best developers don’t just write secure code – they plan for when that security fails.

A good incident response isn’t about fancy tools or thick playbooks. It’s about knowing exactly what to do when things go sideways. Teams need clear steps, not panic. We’ve watched organizations lose precious hours during incidents because nobody knew who could approve taking a server offline.

Critical response elements:

- Clear command chains

- Detailed logging setup

- Quick rollback plans

- Communication protocols

- Recovery procedures

Perfect security doesn’t exist. But quick recovery? That’s achievable. And sometimes, that’s what matters most.
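For the “detailed logging setup” item, one option is structured logs that are easy to search mid-incident. The sketch below uses Python’s standard `logging` module. The `JsonFormatter` class, the `security` logger name, and the `context` extra field are our own assumptions; pick whatever fields your responders actually need.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line for easy searching."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            # UTC timestamps keep timelines consistent across servers.
            "time": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # "context" is a hypothetical extra field supplied by the caller.
        context = getattr(record, "context", None)
        if context:
            payload["context"] = context
        return json.dumps(payload)


def build_security_logger(name: str = "security") -> logging.Logger:
    """Return a logger that writes JSON lines to standard error."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid stacking handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

A call such as `build_security_logger().warning("login failed", extra={"context": {"user": "alice"}})` then emits a single JSON line that responders can filter by level, logger, or context.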

Collaboration Between Development and Security

Old school thinking pits security teams against developers, like they’re natural enemies or something. That’s garbage. After watching hundreds of projects crash and burn from this mindset, we started pushing for what actually works: getting these folks talking to each other.

Security people need to quit acting like the “department of no,” and developers need to stop treating security like it’s some annoying checkbox. Our most successful students are the ones who build bridges between teams. They share tools, grab lunch together, and actually listen to each other’s concerns.

Look, nobody wants to be the reason for a breach. When devs and security pros work as partners instead of opponents, magic happens. Code gets stronger, releases move faster, and everybody sleeps better at night.

One team we trained cut their security incidents by 70% just by having weekly cross-team standups. If you’re wondering how to improve your team’s security mindset, regular collaboration sessions are a proven place to start.

Practical Security Mindset Examples

After years of training developers, certain patterns keep showing up in successful security programs. None of this is rocket science, but it’s amazing how many teams skip the basics while chasing fancy new tools.

The stuff that actually works:

- Check every single input like it’s toxic waste

- Map out threats before writing any code

- Lock down permissions like your job depends on it (because it does)

- Watch those dependencies like a hawk

- Let the machines do the repetitive security checks

- Keep learning – threats evolve, so should you

- Know what to do when (not if) something breaks

- Get dev and security teams talking – for real

Here’s the thing about security mindsets: they’re habits, not hobbies. Every bootcamp we run, every team we train, the message stays the same: do these things until they’re second nature. When things go wrong, you don’t rise to the occasion; you fall to the level of your training. That’s why cultivating a security mindset from the very start is so critical.

Conclusion

Security has to start in the code; you can’t tack it on later when things break. Everyone who touches the software needs to think about risks, from day one through launch. The basics don’t change: check what goes in, watch who gets access, and run automated scans. When (not if) something goes wrong, you’ll want a plan ready. The whole team has to own security, just like quality. Safer code comes from making security part of the daily grind.

Ready to build security into your team’s DNA? Join our bootcamp and start writing safer code from day one.

FAQ

How do software security and application security connect with secure coding and threat modeling in real projects?

Software security and application security both rely on secure coding to block mistakes that attackers target. Threat modeling helps teams picture possible attacks before they happen so they can catch weak spots early. Together, they form the backbone of secure software development, making sure every step from design to delivery considers safety.

Why is risk analysis important in secure software development and shift-left security practices?

Risk analysis helps teams spot where trouble may come from before it strikes. In secure software development, pairing risk analysis with shift-left security means you fix issues at the start instead of later. This habit makes security awareness part of daily work, which saves time and keeps projects safe.

How do vulnerability management, penetration testing, code scanning, static code analysis, and dynamic code analysis work together?

Vulnerability management is about finding and fixing weak spots. Penetration testing simulates attacks, while code scanning checks for mistakes in written code. Static code analysis reviews code without running it, and dynamic code analysis looks at software while it runs. Used together, they give a full view of software health.

What role does a security champion play in building security culture and a security-first mindset?

A security champion is a team member who promotes security culture and spreads a security-first mindset. They encourage secure design choices, share knowledge, and keep security awareness alive. By guiding their peers, they make secure development feel natural, not forced.

Why are least privilege, secure SDLC, application integrity, data encryption, and access control important?

Least privilege limits access so people only see what they need. Secure SDLC keeps security in every stage of software development. Application integrity makes sure programs are not changed in harmful ways. Data encryption protects secrets, while access control manages who can use data. Together, they prevent leaks and attacks.

How do threat prevention, threat detection, cyber resilience, and security automation work in daily software use?

Threat prevention blocks attacks before they happen, while threat detection alerts teams when something slips by. Cyber resilience means software can recover quickly after trouble. Security automation ties these tasks together, running scans and checks without slowing people down. This balance keeps systems strong and responsive.

References

- [1] https://en.wikipedia.org/wiki/Continuous_integration

- [2] https://en.wikipedia.org/wiki/Security_awareness