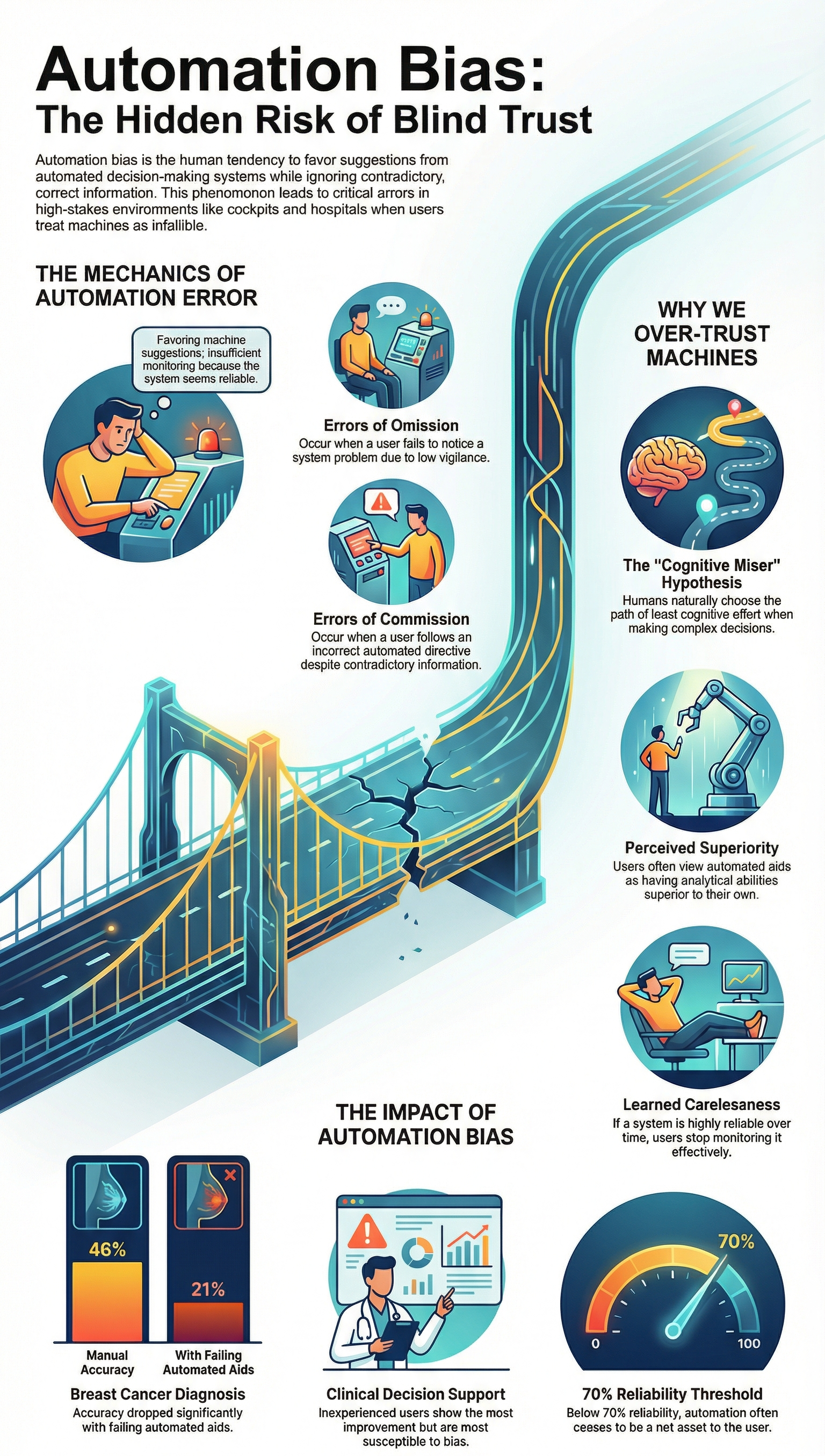

Yes, over-reliance on AI is a real and present danger. It’s not science fiction; it’s happening right now in hospitals, on trading floors, and in your own web browser. The core peril is automation bias: the uncritical acceptance of AI outputs because we assume the machine knows better. This leads to unchecked errors, atrophied skills, and a quiet surrender of our own judgment.

The evidence is mounting, from brain scan studies to leaked corporate memos. To understand the full scope is to arm yourself against it. Keep reading to see where the line between tool and crutch truly lies.

Key Takeaways

- Automation bias causes us to trust flawed AI outputs, leading to critical errors we miss.

- Outsourcing thinking to AI erodes professional skills and creative problem-solving capacity.

- A hybrid framework, with human oversight at the center, is the only sustainable path forward.

The Thinking Gap

Last week, I reviewed a junior developer’s code. It was clean and efficient, but wrong for our system. When I asked why he chose that logic, he shrugged. “The AI suggested it.” He hadn’t traced the flow himself or considered our specific edge cases. He had fallen into a common trap: trusting complex AI-generated logic that can crash production.

We see this pattern everywhere.

“Automation bias refers to our tendency to favor suggestions from automated decision‑making systems and to ignore contradictory information made without automation, even if it is correct.” – Bryce Hoffman, Forbes. [1]

That gap between the clean model and the messy real world is dangerous. It’s where a rushed doctor might not question an AI’s diagnosis. It’s where an analyst might let a trading algorithm run without grasping the market mood it can’t see. We get a polished output and assume deep understanding. But the machine is just calculating. When we forget that, our own thinking muscles atrophy.

The Silent Erosion of Your Own Expertise

Automation bias is the start. When a tool seems competent, our brains stop questioning it. A 2024 study showed people using an AI assistant were much worse at spotting its mistakes. The tool’s presence dulled their critical instinct.

This trust creates a loop. We verify less, so we trust more. We see it in our bootcamp: a student uses AI to write a security function. The code looks right, but they miss a logic flaw because they didn’t trace it. They become an editor, not a builder.
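To make that “looks right” failure concrete, here is a minimal sketch of the kind of flaw that slips past a quick read. The function names and the `/srv/uploads` root are hypothetical, and the sketch uses `posixpath.normpath` so it behaves the same without touching the filesystem; real code should also resolve symlinks (for example with `os.path.realpath`) before comparing.

```python
import posixpath

UPLOAD_ROOT = "/srv/uploads"  # hypothetical upload directory


def is_inside_root_naive(requested: str, root: str = UPLOAD_ROOT) -> bool:
    """Plausible-looking check that compares raw string prefixes."""
    full = posixpath.normpath(posixpath.join(root, requested))
    # Flaw: "/srv/uploads_backup" also starts with "/srv/uploads".
    return full.startswith(root)


def is_inside_root(requested: str, root: str = UPLOAD_ROOT) -> bool:
    """Traced version: compare whole path components, not characters."""
    full = posixpath.normpath(posixpath.join(root, requested))
    return posixpath.commonpath([root, full]) == root


if __name__ == "__main__":
    for candidate in ["report.pdf", "../../etc/passwd", "../uploads_backup/keys.txt"]:
        print(candidate, is_inside_root_naive(candidate), is_inside_root(candidate))
```

The naive version correctly rejects the obvious `../../etc/passwd`, which is exactly why it feels finished. Only tracing the sibling-directory case (`../uploads_backup/keys.txt`) exposes the gap.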

| Effect | Description | Example |
| --- | --- | --- |
| Automation Bias | Trusting AI outputs without questioning | Junior dev uses AI logic without tracing edge cases |
| Skills Atrophy | Decline in problem-solving or debugging ability | Missing logic flaw in security function |
| Expertise Bias | Undervaluing human judgment | Accepting AI recommendation over personal insight |

The final stage is “expertise bias”: we undervalue our own knowledge in favor of the machine’s answer. Defending your nuanced judgment starts to feel harder. For new developers, this is corrosive.

How do you build skill without the foundational struggle? The cost is clear. You stop building accurate mental models. Your ability to solve novel problems fades. You lose the instinct for when something feels wrong.

In security, that lost instinct is your first line of defense. It’s a profound professional vulnerability, and one we train against every day. A short triage pass with linters, type checkers, and tests catches many issues before deeper investigation and helps developers avoid common secure-coding pitfalls.
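A minimal sketch of that triage order, assuming a Python project that uses `ruff`, `mypy`, and `pytest` (swap in whatever your stack uses). The `triage` and `command_check` helpers are hypothetical names for illustration; what matters is the ordering, with fast mechanical checks first and human attention after. Passing triage only clears the cheap failures; it says nothing about whether the logic is right.

```python
import subprocess
from typing import Callable, Sequence

# A check is a (name, zero-argument callable returning True on success) pair.
Check = tuple[str, Callable[[], bool]]


def command_check(cmd: Sequence[str]) -> Callable[[], bool]:
    """Wrap a CLI command as a check; a missing tool counts as a failure."""
    def run() -> bool:
        try:
            return subprocess.run(list(cmd)).returncode == 0
        except FileNotFoundError:
            return False
    return run


def triage(checks: Sequence[Check]) -> list[tuple[str, bool]]:
    """Run cheap checks in order and stop at the first failure."""
    results: list[tuple[str, bool]] = []
    for name, check in checks:
        passed = check()
        results.append((name, passed))
        if not passed:
            break  # fix the cheap failure before spending time on deep review
    return results


# Assumed toolchain for a Python project; replace with your own commands.
DEFAULT_CHECKS: list[Check] = [
    ("lint", command_check(["ruff", "check", "."])),
    ("types", command_check(["mypy", "."])),
    ("tests", command_check(["pytest", "-q"])),
]
```

Calling `triage(DEFAULT_CHECKS)` from the project root returns a list of `(name, passed)` pairs that ends at the first failing step.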

When High Stakes Meet Black Boxes

In high-stakes fields, abstract risks become concrete. An AI diagnostic tool might suggest a common condition. A tired doctor, trusting its track record, could miss a rarer one. That single error can delay treatment. The tool’s usual accuracy makes its rare failures more insidious.

In finance, algorithmic models create feedback loops. If many firms use similar AI models, they can turn a market dip into a crash. The 2010 Flash Crash, in which automated trading helped drive a plunge and rebound within minutes, showed how fast this can happen. Over-reliance removes human circuit breakers, creating a fragile system.
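The feedback loop is easy to see in a deliberately crude toy model. This is not a market simulator: the agents, trigger levels, and fixed price impact below are invented for illustration. It only shows why identical trigger rules, the kind you get when many firms run similar models, turn one small shock into a cascade, while varied rules absorb it.

```python
def forced_selling(thresholds: list[float], start: float, shock: float,
                   impact: float) -> tuple[float, int]:
    """Apply a price shock, then let stop-loss style rules fire in turn.

    Each agent sells once if the price is below its threshold, and every
    sale pushes the price down by a fixed `impact`. Returns (final price, sales).
    """
    price = start - shock
    sales = 0
    for trigger in sorted(thresholds, reverse=True):  # highest trigger fires first
        if price >= trigger:
            break  # this agent holds, and every lower trigger is also safe
        price -= impact
        sales += 1
    return price, sales


# Ten agents running the same model share one trigger level...
homogeneous = [97.0] * 10
# ...while ten agents with different models spread their triggers out.
diverse = [97.0 - 2 * i for i in range(10)]

if __name__ == "__main__":
    print(forced_selling(homogeneous, start=100.0, shock=3.1, impact=0.5))
    print(forced_selling(diverse, start=100.0, shock=3.1, impact=0.5))
```

With identical triggers, the same 3.1-point shock sets off all ten sales; with spread-out triggers, one sale happens and the cascade stops.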

In security, a developer may trust an AI scanner to find every flaw, but these tools mostly detect known patterns. Teams need to understand how AI-generated code can behave unexpectedly so they can catch novel exploits before they slip through. We’ve seen teams miss subtle logic flaws because they relied on the scan. This over-reliance creates systemic dangers:

- Cascading Errors: One AI’s mistake feeds into another.

- Degraded Skills: Teams struggle when the tool fails.

- Homogenized Output: Everyone’s analysis starts to sound the same.
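A toy signature scanner shows why “only known patterns” matters. The three rules below are hypothetical stand-ins for a real tool’s rule set. The flawed authorization function contains no dangerous call for any signature to match, so the scan comes back clean even though any logged-in user can read any document.

```python
import re

# Hypothetical rules: real scanners ship many more, but each still
# describes a pattern someone has already seen and written down.
KNOWN_PATTERNS = {
    "eval-call": re.compile(r"\beval\s*\("),
    "shell-true": re.compile(r"shell\s*=\s*True"),
    "pickle-load": re.compile(r"\bpickle\.loads?\s*\("),
}


def scan(source: str) -> list[str]:
    """Return the names of every rule whose pattern appears in the source."""
    return [name for name, pattern in KNOWN_PATTERNS.items() if pattern.search(source)]


RISKY = "result = eval(user_input)\n"

# Logic flaw: the second clause is true for every logged-in user,
# so ownership is never enforced. No signature describes this.
FLAWED_AUTH = '''
def can_read(user_id, owner_id):
    return user_id == owner_id or user_id is not None
'''

if __name__ == "__main__":
    print("risky:", scan(RISKY))
    print("flawed auth:", scan(FLAWED_AUTH))
```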

Your own judgment is the final, necessary layer of defense. We build that instinct here.

Why the Skeptics Are Your Best Guide

The most vocal critics of AI answers (biohackers, clinicians, veteran developers) aren’t afraid of tech. They know its limits intimately. In secure development, a model’s training data rarely includes our messy reality: legacy systems, unique threat models, and bizarre edge cases. We’ve learned to treat this skepticism as a crucial tool, not a complaint.

For us, the core principle is validation. Show us the exploit, trace the vulnerability. An AI’s proposed fix is just a probabilistic guess until we stress-test it. This mindset forces our team to ask the right question: “What did this model never see?” It centers the human context, the actual system under threat. [2]
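As a small illustration of “stress-test it,” here is a hypothetical AI-proposed sanitizer checked by brute force: every input up to six characters over a tiny alphabet is tried against the one property the fix promises, that no `../` survives. The function names and alphabet are assumptions for the sketch. For real path handling, rejecting requests that resolve outside the allowed directory is generally safer than rewriting the string.

```python
from itertools import product
from typing import Callable, Optional

ALPHABET = "./a"  # characters that matter for traversal, plus one ordinary letter


def strip_traversal_proposed(path: str) -> str:
    """Hypothetical AI-proposed fix: delete every "../" in a single pass."""
    return path.replace("../", "")


def strip_traversal_looped(path: str) -> str:
    """Repeat the deletion until no "../" remains."""
    while "../" in path:
        path = path.replace("../", "")
    return path


def find_counterexample(fix: Callable[[str], str], max_len: int = 6) -> Optional[str]:
    """Try every input up to max_len; return one whose output still contains "../"."""
    for length in range(max_len + 1):
        for chars in product(ALPHABET, repeat=length):
            candidate = "".join(chars)
            if "../" in fix(candidate):
                return candidate
    return None


if __name__ == "__main__":
    print(find_counterexample(strip_traversal_proposed))  # a 6-character counterexample
    print(find_counterexample(strip_traversal_looped))    # None
```

The one-pass `replace` looks correct on every example a reviewer is likely to type by hand, yet the search turns up inputs like `....//` that reassemble `../` after deletion. Bounded exhaustive search is used here instead of random fuzzing so the result is deterministic.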

When a senior engineer labels generated code “dangerous,” it’s our cultural immune response, defending against new vulnerabilities. We run every AI-assisted idea through a gauntlet of their experience. Their red flags are key:

- Assumptions of a perfect, standardized environment.

- Ignoring adversarial intent beyond the training data.

- Trading long-term security for short-term convenience.

Listening to the Right Critics


The fear in expert communities isn’t job loss, but homogenization. “The output looks polished,” a developer said, “but it lacks the why.” The risk is creating practitioners who can use a tool without understanding the principle, like implementing encryption without grasping key management. We lose the nuanced judgment forged in debugging failures.

Our method is different. We use AI for drafting and brainstorming attack vectors, but never for our final voice. The gap between a model’s output and the reality of a live exploit is where our expertise lives. We demand proof, not prediction. We guard our perspective, the specific lesson from a past breach that makes training resonate. Our content embraces this messiness. The process is structured:

- Brainstorm: AI generates attack scenarios.

- Validate: Experts apply real-world constraints and incident data.

- Synthesize: We forge the final lesson from this collision, preserving the human narrative of discovery.

Building a Sustainable Hybrid Framework

Rejecting AI outright isn’t the answer. Recalibrating our relationship with it is. The goal should be augmentation, not full automation. This means designing workflows where AI acts as a powerful assistant, but never holds final authority. In secure development, this isn’t a nice-to-have; it’s a security requirement. We build structured human oversight into every process.

For our developers, this looks like using AI to suggest code blocks or flag common vulnerability patterns. But the critical next step is always manual. We review the logic ourselves, check for novel attack vectors the model might miss, and understand the full-stack implications of any change.

The AI is an advanced spell-checker, not the author. Our deep understanding of the system’s architecture is the non-negotiable core. We write the integration tests, we define the security parameters. A practical framework for us includes:

- AI Generates: First drafts of routine code, lists of potential threats.

- Human Curates: Apply contextual knowledge of our specific systems and threat landscape.

- Human Decides: Retain final approval on any code that touches production.

- Audit Regularly: We run manual penetration testing drills, bypassing AI tools entirely, to keep our fundamental skills sharp.
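The first three rules above can be expressed as a merge gate; the fourth, manual audits, is deliberately left out of code. This is a sketch under assumptions: the `Change` record and the production path prefixes are hypothetical, and many teams would enforce the same rule through their code host’s required-review settings rather than custom code. The design choice worth noting is that an AI review result is recorded but never counts toward approval.

```python
from dataclasses import dataclass, field

# Hypothetical paths that count as "touching production" for this sketch.
PRODUCTION_PREFIXES = ("deploy/", "infra/", "src/payments/")


@dataclass
class Change:
    paths: list[str]
    ai_generated: bool
    ai_review_passed: bool = False  # recorded for context, never sufficient
    human_approvals: list[str] = field(default_factory=list)


def touches_production(change: Change) -> bool:
    return any(path.startswith(PRODUCTION_PREFIXES) for path in change.paths)


def may_merge(change: Change) -> tuple[bool, str]:
    """Human Decides: AI output and AI review alone can never clear the gate."""
    approved = len(change.human_approvals) > 0
    if touches_production(change) and not approved:
        return False, "production change needs a human approval"
    if change.ai_generated and not approved:
        return False, "AI-generated change needs human curation"
    return True, "ok"


if __name__ == "__main__":
    bot_only = Change(paths=["deploy/app.yaml"], ai_generated=True, ai_review_passed=True)
    print(may_merge(bot_only))
    reviewed = Change(paths=["deploy/app.yaml"], ai_generated=True,
                      ai_review_passed=True, human_approvals=["alice"])
    print(may_merge(reviewed))
```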

The Pilot, Not the Passenger

This hybrid approach turns AI from a potential crutch into a legitimate lever. It acknowledges the tool’s power while anchoring every process in human responsibility. It’s the operational difference between being a pilot and a passenger. Autopilot is invaluable, but you’d never want a pilot who forgot how to land the plane manually in a storm.

The same principle applies to how we create our training content. We use AI to overcome blank-page syndrome, to generate alternative explanations for a complex topic like cryptographic nonces. But then we ruthlessly edit, personalize, and inject the lived experience from our own labs and consulting work. The AI gives us raw material; we sculpt the final lesson.

Our judgment on what resonates with a working developer, what sticks, is the final filter. This preserves our team’s hard-won skill while boosting productivity. It prevents the slow atrophy of core knowledge. We’ve seen bootcamps that lean too hard on generated content; their graduates can talk about theory but falter in practical, messy scenarios.

Our framework is designed to prevent that. It ensures we, the experts, are always in the loop, making the final call that aligns with our standards for security and clarity.

FAQ

What are the main AI overreliance risks teams should watch for in daily work?

The main risks are skill degradation, overtrust in AI outputs that contain errors, and cognitive atrophy. When people depend too heavily on automation, critical thinking weakens and nuanced judgment starts to fail. Hybrid workflows that keep final authority with people keep human oversight active, slow the erosion of expertise, and help prevent flawed decisions.

How does automation bias affect developers, especially those at entry level?

Automation bias can mislead beginners and experienced developers alike. Entry-level coders are especially prone to accepting AI output they cannot yet evaluate, which leads to flawed project outcomes and gaps in their own reasoning. Over-reliance also lets hallucinated facts or APIs propagate through a codebase and produces generic, interchangeable output. Deliberately doing some tasks without AI, a tactic borrowed from the biohacking community, and manually logging and checking your own work help maintain accuracy and prevent the skill erosion that excessive reliance causes.

Can dependence on AI erode social skills?

Yes. Heavy dependence on AI can wear down social skills. Overtrusting AI and letting it stand in for real conversations reduce real-world interaction and weaken communication skills. People’s own admissions of “AI laziness” show how often they reach for generative AI instead of thinking. Regular human oversight and hybrid workflows help maintain social skills and nuanced judgment.

What precautions reduce the spread of AI hallucinations and unverified echo chambers?

Hallucinations that propagate unchecked, and echo chambers built on unverified AI output, can distort decisions, spread misinformation, and skew discussions toward whatever is newest (recency bias). Related risks include cybersecurity pitfalls, AI-assisted scams, and romance-fraud chatbots.

Relying on reputable sources, following a verification protocol that links each claim to its source, and demanding empirical proof keep content accurate and keep humans in control of outputs.

How can cognitive atrophy and loss of critical thinking be prevented as AI shapes trends in 2026?

Cognitive atrophy and loss of critical thinking happen when people overuse generative tools. AI also homogenizes content, and generic output wears readers out, which reduces engagement and insight. Heeding warnings from people with lived experience, pushing back skeptically on AI hype, and using hybrid workflows all encourage active thinking. Focusing on augmentation rather than automation helps people retain their skills while still benefiting from AI responsibly.

Reclaiming Your Agency in the AI Age

The danger of AI overreliance isn’t that machines get smarter; it’s that we get lazier. Judgment, creativity, and critical thinking weaken when we let tools do the work for us. Use AI to handle repetitive or generative tasks, but reserve analysis and decisions for yourself.

Treat your mind like career insurance. What task did you do on autopilot this week that you’ll tackle manually tomorrow? Strengthen your skills at the Secure Coding Practices Bootcamp.

References

- [1] Bryce Hoffman, Forbes, March 10, 2024: https://www.forbes.com/sites/brycehoffman/2024/03/10/automation-bias-what-it-is-and-how-to-overcome-it/

- [2] Wikipedia, “Automation bias”: https://en.wikipedia.org/wiki/Automation_bias