AI-generated code needs structure and oversight to stay maintainable. It should be treated as a starting point, not a final deliverable. When teams assume it’s production-ready, refactoring costs rise and technical debt builds quietly. The safer approach is simple: enforce clear standards, review the output carefully, and rely on automation from day one.

That means defined coding rules, required tests, security checks, and consistent CI gates. These habits reduce cleanup work and make future changes easier. This guide breaks down practical steps based on real developer challenges. Keep reading to see how to scale AI support without creating long-term debt.

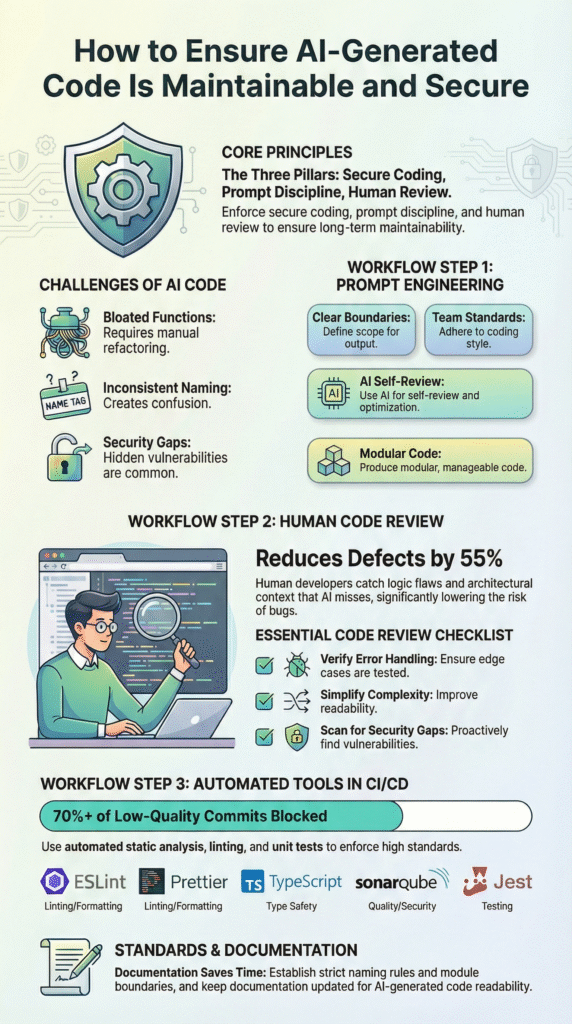

Core Principles for Maintainable and Secure AI-Generated Code

- Maintainable AI-generated code starts with Secure Coding Practices, enforced through prompts, reviews, and automated checks.

- Human review combined with automated analysis can cut AI-related defects by more than half.

- Clear standards and documentation turn AI from a liability into a sustainable partner for development.

What Makes AI-Generated Code Hard to Maintain?

AI-generated code often becomes a maintenance headache. It can have inconsistent structure, poor readability, and hidden bugs. These problems usually come from vague instructions and too little checking. Many of these issues mirror the main risks of vibe coding, where speed and convenience quietly override structure and long-term thinking.

About 60% of developers said AI-written code required more refactoring work in 2024. The tools are fast, but that speed hides long-term costs when the output ignores our team’s architecture and conventions.

We’ve lived this. Early prototypes looked good and passed tests. But a month later, onboarding new team members was painful. File boundaries were messy, naming was inconsistent, and error handling was missing. The code worked, but nobody wanted to touch it.

Another common issue is hidden coupling. The AI optimizes for making a single piece work, not for overall system clarity. This leads to huge functions, copied logic, and violations of good design principles that only show up when we need to change something.

The most frequent failures we see are:

- Bloated functions that are hard to follow.

- Inconsistent naming that confuses everyone.

- Silent security gaps, like missing input checks.
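As a hypothetical illustration of the last failure, here is the kind of gap a reviewer might catch. The function names and the page-size limits are assumptions made up for this sketch, not taken from any real API.

```python
from typing import Optional

# Hypothetical limits for illustration; real values come from your API's contract.
DEFAULT_PAGE_SIZE = 20
MAX_PAGE_SIZE = 100


def parse_page_size_unchecked(raw: str) -> int:
    # Typical AI draft: works on the happy path and silently trusts the caller,
    # so "-5" or "1000000" flow straight through to the database layer.
    return int(raw)


def parse_page_size(raw: Optional[str]) -> int:
    """Parse a user-supplied page size, rejecting values the backend should never see."""
    if raw is None or raw.strip() == "":
        return DEFAULT_PAGE_SIZE
    try:
        value = int(raw.strip())
    except ValueError:
        raise ValueError(f"page size must be an integer, got {raw!r}") from None
    if not 1 <= value <= MAX_PAGE_SIZE:
        raise ValueError(f"page size must be between 1 and {MAX_PAGE_SIZE}, got {value}")
    return value
```

Both versions pass a happy-path test like `"50"`, which is exactly why the missing check stays silent until someone looks for it.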

These aren’t flaws in the AI itself. They’re failures in our process.

How Does Prompt Engineering Improve Maintainability?

Good prompts enforce our standards. They reduce bloat and guide the AI toward modular, readable code that fits our existing architecture.

Our internal tests last year showed that detailed prompts cut rework by 30–40%. The models respond to clear constraints, not just loose instructions. Many teams run into the same pitfalls when they treat prompting as an afterthought instead of part of engineering discipline.

We learned this the hard way. Asking the AI to “build an API endpoint” gave us fragile code. When we changed our prompts to embed Secure Coding Practices, the output improved immediately. The AI started producing smaller functions, clear error handling, and better interfaces.

A maintainability-focused prompt does three things:

- It sets clear boundaries.

- It encodes our team’s standards.

- It tells the AI to review its own work before showing it.

This approach also reduces the “AI slop” effect: code that works but is messy and hard to maintain. Setting limits on file size or function length prevents a lot of that debt.
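A function-length limit is easy to check mechanically. The sketch below uses Python's standard `ast` module to flag functions longer than a threshold; the 30-line default and the helper name `long_functions` are assumptions for illustration, not a recommendation from this guide.

```python
import ast
from typing import List, Tuple

MAX_FUNCTION_LINES = 30  # hypothetical team limit; tune it to your style guide


def long_functions(source: str, max_lines: int = MAX_FUNCTION_LINES) -> List[Tuple[str, int]]:
    """Return (name, line_count) for every function longer than max_lines."""
    tree = ast.parse(source)
    offenders = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # end_lineno exists on AST nodes from Python 3.8 onward;
            # the count runs from the `def` line to the last line of the body.
            length = node.end_lineno - node.lineno + 1
            if length > max_lines:
                offenders.append((node.name, length))
    return offenders


# Example: one short function and one that is far too long.
sample = "def short():\n    return 1\n\ndef long():\n" + "    x = 1\n" * 40 + "    return x\n"
print(long_functions(sample, max_lines=10))
```

Feeding the same limit into the prompt and into a check like this means the AI gets the rule up front and the pipeline catches it when the rule is ignored.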

In practice, an effective prompt includes:

- Direct references to our coding style guide.

- A request for small, modular functions.

- Instructions to generate tests and documentation with the code.

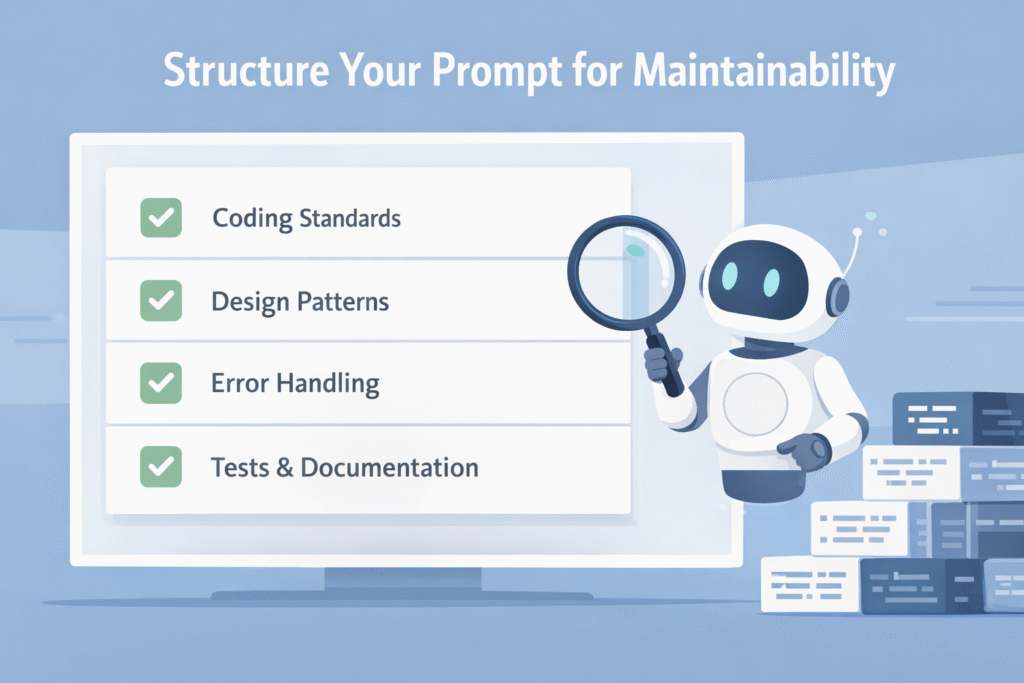

What Should a Maintainability-Focused Prompt Include?

A prompt that’s meant to protect maintainability has to do more than ask for working code. It needs to set expectations upfront, in plain language, the same way a senior developer would during a handoff.

From our experience, the order matters. When we put Secure Coding Practices first, before the feature request, the AI tends to respect them instead of treating them as optional. We keep a small set of shared prompt templates for this reason. Teams that reuse them usually get more consistent results and fewer surprises during review.

A solid, maintainability-focused prompt usually includes:

- Clear coding standards, including naming rules and file structure

- Design patterns to follow, and patterns that should be avoided

- Rules for error handling, logging, and input validation

- Constraints around dependencies, performance, or readability

- A final instruction asking the AI to point out possible code smells or risks

This kind of structure reduces tangled dependencies and makes the code easier to change later. It also shifts the AI from “just make it work” toward “make it safe, clear, and maintainable.”
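The checklist above can be turned into a shared template so every request puts the standards first and ends with the self-review instruction. This is a minimal sketch: the `PromptTemplate` class, its field names, and the example rules are hypothetical, and the rendered text is plain Markdown you would paste into whichever assistant your team uses.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PromptTemplate:
    """Hypothetical shared template; the sections mirror the checklist above."""
    standards: List[str]
    patterns_to_follow: List[str]
    patterns_to_avoid: List[str]
    safety_rules: List[str]
    constraints: List[str]

    def render(self, feature_request: str) -> str:
        sections = [
            ("Coding standards", self.standards),
            ("Follow these patterns", self.patterns_to_follow),
            ("Avoid these patterns", self.patterns_to_avoid),
            ("Error handling, logging, and input validation", self.safety_rules),
            ("Constraints", self.constraints),
        ]
        lines: List[str] = []
        for title, items in sections:
            lines.append(f"## {title}")
            lines.extend(f"- {item}" for item in items)
            lines.append("")
        # The feature request comes after the standards, so they read as requirements.
        lines.append("## Task")
        lines.append(feature_request)
        lines.append("")
        lines.append("## Before answering")
        lines.append("Review your code against the rules above and list any code smells or risks you see.")
        return "\n".join(lines)


template = PromptTemplate(
    standards=["Use snake_case for functions", "One module per resource"],
    patterns_to_follow=["Inject I/O dependencies"],
    patterns_to_avoid=["God classes", "Copy-pasted validation logic"],
    safety_rules=["Validate all external input", "Never log secrets"],
    constraints=["No new third-party dependencies", "Functions under 30 lines"],
)
print(template.render("Add an endpoint that lists a user's orders."))
```

Keeping the template in version control lets teams review changes to it the same way they review code.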

Why Must AI-Generated Code Always Undergo Human Code Review?

AI output is a draft. Human review catches logic flaws, edge cases, and long-term design risks the model will miss.

“AI cannot understand business context because that information often lives outside the codebase… An LLM can validate that code is syntactically correct, but it has no understanding of the why behind the code” – Greg Foster.

Studies show peer review reduces defects by about 55%. That reduction matters even more for AI-assisted code.

We treat the AI like a very energetic junior developer who doesn’t remember what it coded yesterday. That keeps expectations realistic. Reviewers shouldn’t just check if the code runs; they need to ask if it will make sense six months from now.

Human reviewers spot problems the AI glosses over: race conditions in async code, overcomplicated abstractions, subtle performance traps. These rarely show up in basic tests.
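Here is a small, self-contained illustration of the async race condition mentioned above, using Python's `asyncio`. The `Counter` class is hypothetical, and `asyncio.sleep(0)` stands in for any real `await` (a database call, an HTTP request) that sits between reading and writing shared state.

```python
import asyncio


class Counter:
    def __init__(self) -> None:
        self.value = 0
        self._lock = asyncio.Lock()

    async def increment_unsafe(self) -> None:
        current = self.value
        await asyncio.sleep(0)  # other tasks run here and read the same stale value
        self.value = current + 1

    async def increment_safe(self) -> None:
        # The lock makes the read-await-write sequence atomic with respect to other tasks.
        async with self._lock:
            current = self.value
            await asyncio.sleep(0)
            self.value = current + 1


async def run(safe: bool, n: int = 100) -> int:
    counter = Counter()
    step = counter.increment_safe if safe else counter.increment_unsafe
    await asyncio.gather(*(step() for _ in range(n)))
    return counter.value


print(asyncio.run(run(safe=False)))  # lost updates: well below 100
print(asyncio.run(run(safe=True)))   # 100
```

Both versions pass a test that awaits a single call; the bug only appears under concurrency, which is why it tends to slip past basic tests.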

Reviews also create accountability. Without a clear owner, AI-generated commits become anonymous blobs that erode code quality fast.

Our review checklists focus on:

- Readable syntax and consistent naming.

- Clear modular boundaries and test coverage.

- Alignment with our Secure Coding Practices and threat models.

What Should Reviewers Specifically Look For?

When reviewing AI-generated code, it helps to look past the simple question of “does it run?” The real work is judging how easy it will be to read, change, and protect six months from now. Code that technically works can still be expensive to maintain or risky to ship.

We’ve seen that many AI-related bugs come not from complex logic but from unclear intent and weak structure. Security issues are especially easy to miss, because AI often underplays threats like SQL injection or unsafe input handling. Teams without a clear process for handling unexpected AI code behavior usually discover these gaps too late, during refactors or production incidents.
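The SQL injection case is worth showing side by side, because the unsafe version often looks reasonable at a glance. This sketch uses Python's built-in `sqlite3` module with an in-memory database; the `users` table and both function names are made up for illustration.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str):
    # Pattern to flag in review: user input is spliced directly into the SQL text.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user(conn: sqlite3.Connection, username: str):
    # Parameterized query: the driver passes username as data, never as SQL.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

payload = "' OR '1'='1"
print(find_user_unsafe(conn, payload))  # the injected condition matches every row
print(find_user(conn, payload))         # no user has that literal name
```

In review, any SQL string built from user input with f-strings, `%` formatting, or concatenation is worth flagging, even if the surrounding code has tests.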

A practical review checklist usually includes questions like:

- Is error handling clear and consistent across the code?

- Are edge cases explained, tested, or at least acknowledged?

- Does this introduce complexity that isn’t really needed?

- Could the same result be achieved in a simpler way?

The point isn’t to distrust AI output. It’s to treat the code as something we’re responsible for long term. That mindset, stewardship over speed, keeps small issues from turning into costly ones later.

Which Automated Tools Help Enforce Maintainability Standards?

Static analysis, linting, and automated tests catch code smells, bugs, and rule violations before code gets merged.

In teams using automated enforcement, CI quality gates blocked over 70% of low-quality commits. As the Kovair Blog puts it:

“Enforce Standards: To ensure style coherence, use programs like ESLint, Prettier, and Black… Take Advantage of Static Analysis and Typing – Tools such as TypeScript, mypy, and SonarQube can detect errors early” – Roy M.

We make linting and static analysis non-negotiable gates. This is where maintainability becomes measurable. Metrics on complexity, duplication, and test coverage turn subjective debates into data.

Automated tools also push back against the AI’s tendency to overproduce. If the linting fails, the AI’s output fails. That feedback loop is powerful.

Here’s how different tools map to common risks:

| Tool Category | Primary Risk Addressed | Outcome |
| --- | --- | --- |
| Static analysis | Code smells, vulnerabilities | Early detection |
| Automated linting | Style drift, readability | Consistent syntax |
| Unit tests | Logic regressions | Safer refactors |
| Integration tests | System failures | Production confidence |

Layered checks, a principle from error prevention research, work far better than relying on a single control.

How Should These Tools Be Integrated into CI/CD?

When adding these tools to CI/CD, the key idea is consistency. Every commit should be checked the same way, without exceptions. If problems are allowed to slip through “just this once,” technical debt builds up fast and becomes harder to fix later.

In our setups, AI-generated code goes through the exact same pipeline as human-written code. No special treatment. If linting fails, the merge stops. If tests are missing or broken, the build fails. Security checks always run in the background. This approach keeps quality from depending on who, or what, wrote the code.

A simple and reliable CI/CD flow usually looks like this:

- Run linters and formatters on every pull request

- Execute unit and integration tests before merges are allowed

- Enforce static analysis score thresholds as hard gates

- Trigger security scans automatically on each change

This kind of fail-fast automation catches issues early, when they’re cheaper to fix. It also lowers long-term risk by preventing small problems from becoming part of the codebase. Over time, the pipeline becomes a quiet but effective guardrail for the entire team.
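The flow above can be sketched as a small fail-fast runner that a CI job calls on every pull request. The gate list is an assumption: `black`, `flake8`, `mypy`, `bandit`, and `pytest` are common Python choices, but substitute your team's tools and arguments. The function name `run_gates` is hypothetical.

```python
import subprocess
from typing import List, Sequence, Tuple

# Hypothetical gate list: swap in the formatter, linter, type checker,
# security scanner, and test runner your team has standardized on.
DEFAULT_STEPS: List[Tuple[str, List[str]]] = [
    ("format", ["black", "--check", "."]),
    ("lint", ["flake8", "."]),
    ("types", ["mypy", "."]),
    ("security", ["bandit", "-r", "."]),
    ("tests", ["pytest", "-q"]),
]


def run_gates(steps: Sequence[Tuple[str, Sequence[str]]]) -> int:
    """Run each gate in order and stop at the first failure (fail-fast).

    Returns 0 if every gate passes, otherwise the failing gate's exit code.
    """
    for name, argv in steps:
        print(f"[gate] {name}: {' '.join(argv)}")
        try:
            result = subprocess.run(list(argv))
        except FileNotFoundError:
            # A missing tool means a broken pipeline, not a pass.
            print(f"[gate] {name}: command not found; blocking merge")
            return 127
        if result.returncode != 0:
            print(f"[gate] {name} failed (exit {result.returncode}); blocking merge")
            return result.returncode
    print("[gate] all gates passed")
    return 0
```

A CI job would call it as `sys.exit(run_gates(DEFAULT_STEPS))`, so any nonzero exit code fails the build regardless of who, or what, wrote the change. Stopping at the first failure keeps feedback fast; running every gate and reporting all failures at once is a reasonable alternative.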

How Do Coding Standards and Documentation Reduce AI Technical Debt?

Clear standards give AI something concrete to aim for. Good documentation does something just as important: it explains the why to the people who will touch the code later. Without that context, even clean code can become confusing fast.

We’ve seen teams bring new developers up to speed much faster when documentation is treated as part of the work, not an extra task. When it isn’t, developers end up guessing intent, which slows everything down and leads to mistakes.

Standards also make prompts more reliable. If the AI knows exactly how code should be structured and named, the results become more consistent across features and teams.

The standards that tend to matter most include:

- Enforced naming, formatting, and style rules

- Clear boundaries between modules and responsibilities

- A requirement to update documentation whenever code changes

Documentation isn’t busywork. It protects intent, reduces rework, and helps the whole team move faster with fewer misunderstandings.

What Documentation Should AI Be Required to Generate?

When AI makes a meaningful change to the code, it should also leave a paper trail. Documentation isn’t optional here; it’s part of the deliverable. Without it, teams end up spending time later trying to reverse-engineer decisions that were never written down.

We’ve found it helps to ask the AI to explain why the code exists, not just what it does. That small shift leads to clearer comments and fewer questions during reviews. Code that comes with solid tests also breaks less often, especially when edge cases are involved.

At a minimum, AI-generated code should include:

- Inline comments for logic that isn’t obvious at first glance

- Updates to the README or architecture notes when behavior or structure changes

- Tests that cover edge cases, failure paths, and error handling

This approach lowers the mental effort required to understand the system later. It also reduces guesswork during maintenance and makes future changes safer. In the long run, requiring documentation alongside code saves time, prevents regressions, and keeps the codebase understandable for everyone.
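As an example of the minimum described above, here is a hypothetical retry helper whose comment records why the code exists, paired with `unittest` tests for the normal path, the cap, and the failure path. The function name and its default values are assumptions made up for this sketch.

```python
import unittest


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0) -> float:
    """Return the wait in seconds before retry number `attempt` (1-based)."""
    if attempt < 1:
        raise ValueError(f"attempt must be >= 1, got {attempt}")
    # Why: doubling gives a struggling downstream service room to recover,
    # and the cap keeps a long outage from producing multi-minute waits.
    return min(cap, base * 2 ** (attempt - 1))


class BackoffDelayTests(unittest.TestCase):
    def test_first_attempt_uses_base_delay(self):
        self.assertEqual(backoff_delay(1), 0.5)

    def test_delay_doubles(self):
        self.assertEqual(backoff_delay(3), 2.0)

    def test_delay_is_capped(self):
        self.assertEqual(backoff_delay(50), 30.0)

    def test_rejects_invalid_attempt(self):
        with self.assertRaises(ValueError):
            backoff_delay(0)
```

Saved as a module, the tests run with `python -m unittest` followed by the module name. The comment explains intent that the code alone cannot, and the last test pins down the failure path a reviewer would otherwise have to ask about.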

Why Do Developers Report Language-Specific Maintainability Differences?

Developers often notice that AI-written code feels easier to maintain in some languages than others. That’s not random. AI tends to follow the habits and expectations baked into each language’s ecosystem. Some languages push structure and clarity by default, while others leave more room for messy outcomes.

From developer feedback, languages with strong conventions usually need less cleanup. Java, for example, encourages explicit structure and naming, so AI output often ends up easier to refactor later. In contrast, frameworks like Angular can lead the AI toward large, all-in-one components if prompts aren’t very specific.

Here’s how teams commonly describe the differences:

- Java: Verbose but organized, which makes refactoring easier

- C#: Often introduces too many helper layers, creating moderate technical debt

- Angular: Tends to produce large components, increasing long-term maintenance cost

The takeaway isn’t that one language is better than another. It’s that prompts and standards need to match the language. When expectations align with the ecosystem, AI-generated code becomes far more manageable.

What Ongoing Processes Keep AI-Generated Code Maintainable Over Time?


Keeping AI-generated code healthy over time takes more than good prompts at the start. Without regular checks, quality slowly fades. Small shortcuts pile up, and before long, teams are facing large refactors that could have been avoided.

The teams that do best treat AI as part of their engineering system, not a quick productivity trick. They track simple maintainability signals, watch for rising complexity, and review patterns over time. We run regular audits that look at maintainability, security, and overall structure. These reviews aren’t about blame. They’re checkpoints that help recalibrate how the AI is being used.
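Tracking maintainability signals over time can be as simple as comparing each audit's metrics with the previous one. In this sketch the metric names and the 5% tolerance are hypothetical, and every metric is assumed to be "lower is better"; a metric like test coverage would need the comparison reversed.

```python
from typing import Dict, List


def regressions(previous: Dict[str, float], current: Dict[str, float],
                tolerance: float = 0.05) -> List[str]:
    """Return metrics that worsened by more than `tolerance` (a fraction) since the last audit."""
    flagged = []
    for name, old in previous.items():
        new = current.get(name)
        if new is None:
            continue  # metric no longer tracked; nothing to compare
        if old == 0:
            worse = new > 0  # any increase from zero counts as a regression
        else:
            worse = (new - old) / old > tolerance
        if worse:
            flagged.append(name)
    return flagged


last_audit = {"avg_complexity": 4.0, "duplication_pct": 3.0, "lint_warnings": 0}
this_audit = {"avg_complexity": 4.1, "duplication_pct": 4.5, "lint_warnings": 2}
print(regressions(last_audit, this_audit))
```

A short list of worsening metrics gives each audit a concrete starting point for recalibrating prompts or standards.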

Effective long-term practices usually include:

- CI/CD integration with automated quality gates like tests and static analysis

- Iterative feedback loops, where the AI reviews and improves its own output

- Shared prompt templates to keep style and structure consistent across teams

- Periodic security audits guided by Secure Coding Practices

When automation is paired with steady oversight, quality improvements tend to stick. Teams that invest in these habits avoid constant rewrites and keep their AI-assisted codebases easier to maintain over the long run.

FAQ

How can I improve AI-generated code maintainability from the start?

We can improve the maintainability of AI-generated code by setting clear rules before any code is written. Use disciplined prompt engineering and shared prompt templates that the whole team maintains.

Require modular functions, readable syntax, and consistent naming. Enforce coding standards from the first commit. When we treat AI output as a draft and review it carefully, long-term maintenance becomes easier and more predictable.

What should I check during a code review of AI output?

When reviewing AI output, we should check structure, clarity, and risk. Look for code smells common in LLM output, such as DRY violations and overengineered fixes. Review the error handling logic and confirm that edge cases the AI missed are addressed.

Watch for security vulnerabilities, including SQL injection risks. A strong review reduces technical debt instead of approving code that only works in the short term.

How do static analysis and linting improve LLM code quality?

Static analysis tools find structural issues in AI-generated code before they reach production. Automated linting enforces formatting and naming rules on every commit. CI/CD gates prevent weak changes from merging.

Type checks, complexity limits, and test coverage thresholds strengthen LLM code quality. These tools create steady guardrails that reduce maintenance costs over time.

How can I reduce the technical debt AI introduces over time?

We can reduce AI-introduced technical debt by reviewing patterns early and often. Watch for “AI slop” that needs refactoring and for rising cyclomatic complexity. Require unit and integration tests for important features, whether or not the AI helped generate them.

Use self-review prompts and iterative refinement before merging. When teams combine human and AI work, for example by pair programming with an AI assistant, quality improves and debt grows more slowly.

What security risks should I monitor in AI-generated code?

We should check every change for the security vulnerabilities common in AI-generated code. Pay close attention to SQL injection, cross-site scripting (XSS), and weak error handling. Review dependency conflicts and API design flaws.

Run security checks consistently inside CI/CD gates. Careful review reduces exposure and protects the long-term maintainability of AI-generated code.

Building Long-Term Maintainability into AI-Generated Code

Keeping AI-generated code maintainable is not about slowing teams down. It is about aiming that speed in the right direction. We have seen projects move fast at first, then stall under cleanup work when discipline was missing. The teams that hold up over time lead with Secure Coding Practices and clear standards.

In our bootcamp sessions, we show developers how to blend human judgment with automation. Treat AI as a collaborator, not an autopilot.

References

- https://graphite.com/blog/ai-wont-replace-human-code-review

- https://www.kovair.com/blogs/code-quality-and-maintainability-in-an-ai-assisted-coding-environment/