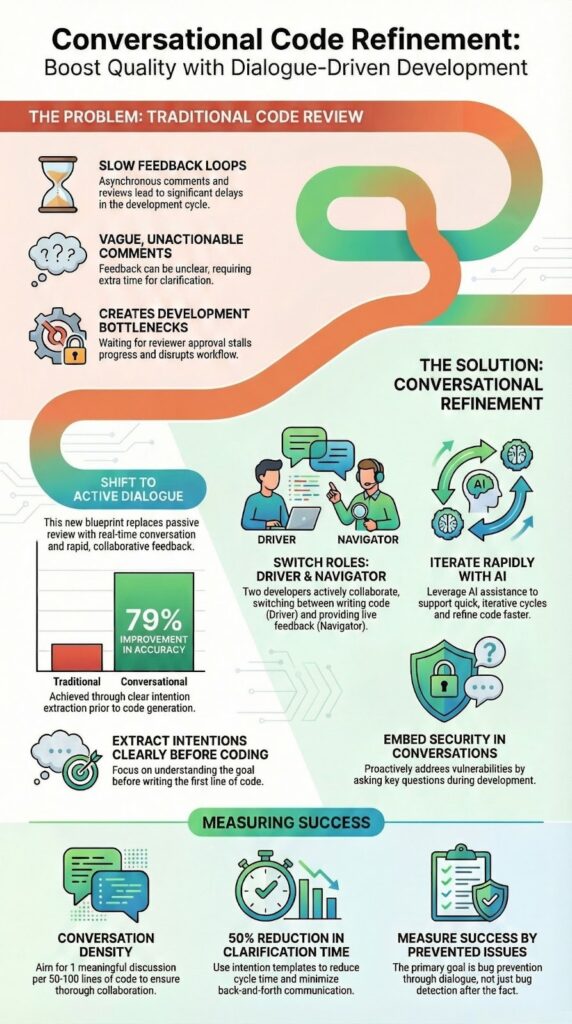

Conversational code refinement makes reviews feel like collaboration instead of judgment. Instead of pull requests idling for days and coming back with vague style complaints, you get fast, focused dialogue around real design and logic. The goal is simple: share intent, ask questions, and shape the solution together.

That kind of back-and-forth catches subtle bugs early, spreads context across the team, and turns reviews into small mentoring sessions instead of roadblocks. It feels closer to structured pair programming than a gate. If you’ve ever been stuck in review purgatory, keep reading.

Key Takeaways

- Structure refinement around intention extraction before code generation

- Maintain balanced dialogue between driver and navigator roles

- Implement rapid iteration cycles with targeted AI assistance

The Problem: Inefficient Code Review Creates Technical Debt

You can feel when code review is hurting more than helping. Pull requests sit untouched, feedback shows up late, and when it does, it’s often about commas, not correctness.

Here’s how broken review habits create quiet technical debt:

- PRs wait days for review, so context fades and small changes grow stale.

- Comments target style or naming, while security, data flow, and failure paths slip by.

- Juniors hold back, afraid of being judged, not guided.

- Seniors treat review like a chore, not a design conversation.

The real cost shows up later. Bugs that slip past review are far more expensive to fix in production than at the pull request stage. Vague notes like “clean this up” or “improve performance” don’t share intent; they force guesswork. People respond, push another commit, and hope it passes.

Without explicit questions like “who validates this input?” or “what happens when this queue spikes?”, teams lean on silent reading and assumptions. That’s where security gaps and performance problems sneak through. Conversational refinement flips this by making the reasoning, tradeoffs, and worries visible, so review becomes shared thinking instead of quiet inspection.

| Aspect | Traditional Code Review | Conversational Code Refinement |
| --- | --- | --- |
| Review Timing | Delayed, often days after submission | Fast, near real-time discussion |
| Feedback Focus | Style, formatting, minor issues | Logic, intent, edge cases, security |
| Knowledge Sharing | Limited, mostly implicit | Explicit and shared through dialogue |
| Junior Developer Experience | Fear of judgment | Guided learning and mentorship |
| Long-Term Impact | Accumulates hidden technical debt | Reduces bugs and design flaws early |

Solution: Conversational Code Refinement Builds Quality Through Dialogue

Conversational code refinement treats review as a live discussion, not a cold inspection. Code improves most when people share intent, ask direct questions, and adjust together in small steps.

In practice, that usually looks like:

- Short, focused review sessions instead of giant, once-a-week PRs

- Clear questions from reviewers: “What failure mode are you worried about here?”

- Developers explaining their tradeoffs out loud, not just through code

This rhythm works whether you’re pairing with another engineer or an AI assistant. Small changes get quick feedback, so you can zoom in on one function’s error handling, then zoom out to talk about architecture patterns without losing the thread.

The real strength sits in how it reveals thinking. When someone asks “why this caching strategy?” the answer does more than defend a choice. It exposes edge cases, clarifies assumptions, and gives everyone shared context. The reviewer understands the constraints, the author spots gaps in their own logic, and the team builds a common mental model.

Over time, those conversations turn review from a gate into a learning loop, where quality comes from shared reasoning, not silent approval.

Core Principles of Conversational Refinement

You get better results when you treat refinement as a clear, shared process instead of guesswork.

1. Extract Intentions Clearly Before Generating Code

Strong refinements start with, “What exactly should change, and why?”

Instead of vague notes, turn feedback into specific intentions. This level of prompt clarity keeps reviewers and AI aligned on scope, intent, and expected outcomes before any code changes begin.

- Direct changes: “Replace the current auth check with this OAuth flow.”

- Reversions: “Go back to the previous error handling here.”

- General improvements: “Optimize this query for heavy load.”
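One way to make these intentions explicit is to capture each piece of feedback as a small structured record before anything is sent to a reviewer or an AI assistant. The sketch below is a minimal illustration in Python; the `Intention` type, its fields, and the prompt wording are hypothetical, not a standard format.

```python
from dataclasses import dataclass
from enum import Enum


class IntentionKind(Enum):
    """The three kinds of refinement intention listed above."""
    DIRECT_CHANGE = "direct change"
    REVERSION = "reversion"
    GENERAL_IMPROVEMENT = "general improvement"


@dataclass(frozen=True)
class Intention:
    """Hypothetical structured form of one piece of review feedback."""
    kind: IntentionKind
    target: str     # the code location the change applies to
    request: str    # what should change
    rationale: str  # why it should change

    def to_prompt(self) -> str:
        """Render the intention as an explicit, scoped instruction."""
        return (
            f"[{self.kind.value}] In {self.target}: {self.request}. "
            f"Reason: {self.rationale}. Change nothing outside this scope."
        )


if __name__ == "__main__":
    intent = Intention(
        kind=IntentionKind.GENERAL_IMPROVEMENT,
        target="orders.search_orders()",  # hypothetical function name
        request="optimize the query for heavy load",
        rationale="it slows down noticeably under peak traffic",
    )
    print(intent.to_prompt())
```

Stating the scope and the reason alongside the request is what keeps a reviewer, or an AI assistant, from wandering into unrelated edits.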

Hybrid methods help: simple rules catch obvious patterns like reversions, while AI models translate softer feedback like “this feels slow” into something concrete, such as “reduce time complexity by changing the sorting approach.”
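A hybrid extractor along these lines might look like the following minimal sketch. It assumes simple regular-expression rules are good enough for the obvious cases, and it uses a hypothetical `fake_model` function where a real AI model call would go; the patterns and labels are illustrative only.

```python
import re
from typing import Callable, Tuple

# Hypothetical rule tables: cheap patterns for feedback whose intent is obvious.
REVERSION_PATTERNS = [re.compile(r"\b(go back to|revert|restore|undo)\b", re.IGNORECASE)]
DIRECT_CHANGE_PATTERNS = [re.compile(r"\b(replace|rename|remove|add)\b", re.IGNORECASE)]


def classify_feedback(comment: str, model: Callable[[str], str]) -> Tuple[str, str]:
    """Return (kind, concrete_request) for one review comment.

    Rules run first because they are fast and predictable; only comments
    that match no rule are handed to the model callable.
    """
    if any(p.search(comment) for p in REVERSION_PATTERNS):
        return "reversion", comment
    if any(p.search(comment) for p in DIRECT_CHANGE_PATTERNS):
        return "direct_change", comment
    # Soft feedback such as "this feels slow": ask the model to make it concrete.
    return "general_improvement", model(comment)


def fake_model(comment: str) -> str:
    """Stand-in for a real AI model call, used only so the sketch runs."""
    if "slow" in comment.lower():
        return "Reduce time complexity by changing the sorting approach."
    return f"Clarify the expected outcome of: {comment}"


if __name__ == "__main__":
    for note in [
        "Go back to the previous error handling here.",
        "Replace the current auth check with this OAuth flow.",
        "This feels slow.",
    ]:
        print(classify_feedback(note, fake_model))
```

Running rules first keeps the common, unambiguous cases cheap and deterministic; only the softer notes pay for a model call.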

2. Facilitate Collaborative Communication Through Role Switching

Borrow the driver–navigator model from pair programming. One person codes, the other guides, and they switch often. Narration helps: saying “I’m adding validation to block injection attacks” makes the reasoning visible. Good reviewers ask questions instead of giving orders: “What if this receives null?” lands better than “Add null checks.”
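For a pair that wants switching to be a habit rather than an afterthought, even a trivial rotation schedule helps. This is a hypothetical sketch: the participant names and the notion of a “turn” are assumptions, and the partner can just as well be an AI assistant.

```python
from typing import Iterator, Tuple


def role_schedule(pair: Tuple[str, str], turns: int) -> Iterator[Tuple[int, str, str]]:
    """Yield (turn number, driver, navigator), swapping roles after every turn.

    A "turn" is whatever unit the pair agrees on: a timebox, a function, or a commit.
    """
    first, second = pair
    for turn in range(1, turns + 1):
        # Odd turns: the first participant drives; even turns: roles swap.
        if turn % 2 == 1:
            yield turn, first, second
        else:
            yield turn, second, first


if __name__ == "__main__":
    for turn, driver, navigator in role_schedule(("Ana", "AI assistant"), 4):
        print(f"turn {turn}: {driver} drives and narrates, {navigator} asks questions")
```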

Security-First Refinement: Building Protection Through Dialogue

Security gets missed when it’s treated as a rare skill or a final gate, not as part of everyday conversation. Conversational refinement pulls security into the core of how you talk about code.

Instead of vague “check security” comments, you use pointed questions shaped by effective prompting, such as:

- “How does this endpoint handle malformed or hostile input?”

- “What scope should be allowed to call this function?”

- “Could this error message leak internal details?”
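To make those questions concrete, here is a minimal, framework-free Python sketch of what good answers can look like in code: an allow-list check for hostile input, and an error path that logs details server-side while returning only a generic message. The handler, the `parse_username` helper, and the username rule are all hypothetical.

```python
import logging
import re
from typing import Tuple

logger = logging.getLogger(__name__)

# Hypothetical allow-list: 3 to 32 letters, digits, or underscores.
USERNAME_RE = re.compile(r"[A-Za-z0-9_]{3,32}")


class ValidationError(Exception):
    """Raised when input fails validation; its message is safe to show clients."""


def parse_username(raw: object) -> str:
    """'How does this endpoint handle malformed or hostile input?'
    Reject anything that is not a short, allow-listed string."""
    if not isinstance(raw, str) or not USERNAME_RE.fullmatch(raw):
        raise ValidationError("invalid username")
    return raw


def handle_request(payload: dict) -> Tuple[int, dict]:
    """Return (status, body). Hypothetical handler, not tied to any framework."""
    try:
        username = parse_username(payload.get("username"))
        return 200, {"greeting": f"hello, {username}"}
    except ValidationError as exc:
        return 400, {"error": str(exc)}
    except Exception:
        # 'Could this error message leak internal details?'
        # Log the stack trace server-side; send the client only a generic message.
        logger.exception("unexpected error in handle_request")
        return 500, {"error": "internal error"}


if __name__ == "__main__":
    print(handle_request({"username": "alice"}))
    print(handle_request({"username": "alice'; DROP TABLE users;--"}))
```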

Those prompts force everyone to think in terms of threats, not just features. Security shows up in design talks (“What are the likely attack paths here?”), in implementation reviews (“Are we sanitizing this input before the database?”), and in final passes before release.

The value of dialogue is how it exposes hidden assumptions. When someone says, “I assumed the gateway handles rate limiting,” that’s a clear moment to verify the setup instead of hoping it’s true. Many real bugs get blocked right there, in the conversation, before they become incidents.

Measuring Refinement Success: Beyond Lines of Code

You can’t judge conversational refinement by lines changed or comment counts alone. Those numbers rarely tell you whether the process made the code safer, clearer, or easier to work on.

More useful signals tend to look like this:

- Conversation density: how many real, technical discussions happen per change set, including exchanges with AI coding assistants when prompts ask for focused technical dialogue instead of broad commentary.

- Knowledge transfer: whether juniors start raising security, performance, or design points on their own, and whether the same lesson appears in fewer pull requests over time.

- Cycle time breakdown: how long work sits idle versus how long people spend in focused discussion. Intention templates can noticeably cut the time spent on clarification.
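The cycle time breakdown can be computed from a simple log of state changes. The sketch below assumes each change set records timestamped transitions between two hypothetical states, `idle` and `discussion`; real tooling would pull these events from your review system instead.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List, Tuple


def time_in_states(events: List[Tuple[str, str]], closed_at: str) -> Dict[str, float]:
    """Sum the hours one change set spent in each state.

    `events` is a chronological list of (ISO timestamp, state) transitions.
    Each state lasts until the next transition; the last one lasts until `closed_at`.
    """
    totals: Dict[str, float] = defaultdict(float)
    starts = [datetime.fromisoformat(ts) for ts, _ in events]
    ends = starts[1:] + [datetime.fromisoformat(closed_at)]
    for (_, state), start, end in zip(events, starts, ends):
        totals[state] += (end - start).total_seconds() / 3600
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical transitions for a single pull request.
    events = [
        ("2024-05-01T09:00", "idle"),        # opened, waiting for a reviewer
        ("2024-05-02T14:00", "discussion"),  # reviewer and author talk it through
        ("2024-05-02T14:45", "idle"),        # waiting on a follow-up commit
        ("2024-05-03T10:00", "discussion"),
    ]
    print(time_in_states(events, closed_at="2024-05-03T10:30"))
    # → {'idle': 48.25, 'discussion': 1.25}
```

In this made-up example, the change spent about 48 hours waiting and barely over an hour in actual discussion, which is exactly the kind of imbalance the breakdown is meant to expose.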

There’s also a simple, blunt measure: how often a conversation prevents a later issue. A short log of “caught a race condition here,” or “spotted a risky query there,” builds a quiet record of wins. Over months, that record shows whether your refinement habits are just polishing code style, or actually making your system steadier and safer.
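The log itself does not need special tooling. A hypothetical minimal version in Python might record each catch with a date and a category, then count catches per month so trends stay visible; the `Catch` type and category labels are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass(frozen=True)
class Catch:
    """One entry in a hypothetical log of issues caught during review conversations."""
    day: date
    category: str  # free-form label, e.g. "race condition" or "risky query"
    note: str


def monthly_counts(log: List[Catch]) -> Dict[str, Counter]:
    """Group catches by month ("YYYY-MM") and count them per category."""
    months: Dict[str, Counter] = {}
    for entry in log:
        key = entry.day.strftime("%Y-%m")
        months.setdefault(key, Counter())[entry.category] += 1
    return months


if __name__ == "__main__":
    log = [
        Catch(date(2024, 5, 2), "race condition", "two workers could claim the same job"),
        Catch(date(2024, 5, 9), "risky query", "unbounded SELECT on the events table"),
        Catch(date(2024, 6, 3), "risky query", "string-built SQL in the export job"),
    ]
    for month, counts in sorted(monthly_counts(log).items()):
        print(month, dict(counts))
```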

FAQ

How does conversational code refinement improve collaboration between humans and AI?

Conversational code refinement improves collaboration by combining pair programming principles with AI assistance. Developers explain intent clearly, while the AI offers context-aware edits and suggestions. Active listening and structured feedback loops reduce misunderstandings, and iterative review helps align the code’s logic with its goals, lowering error rates and supporting an effective hybrid human-AI workflow.

What role do structured prompts play in refining code through conversation?

Structured prompts guide the AI toward the right problem and the expected outcome. Through prompt engineering, end-to-end prompting, and rule-based intention extraction, developers communicate what the code should do precisely. This reduces ambiguity, keeps the AI from settling for trivial fixes, and produces actionable feedback. Clear prompts improve semantic understanding and lead to consistent, maintainable code improvements.

How can pair programming concepts be applied to conversational code refinement?

Pair programming concepts apply through clear driver–navigator roles during conversational refinement. The driver focuses on implementation, while the navigator reviews logic, highlights risks, and offers constructive criticism. Regular role switching ensures balanced participation. This approach supports collaborative debugging, logic walkthroughs, and edge-case discussion without depending on specific tools or environments.

How do feedback loops and reviews enhance code quality in conversations?

Feedback loops and iterative code review strengthen code quality by enabling rapid iterations and analysis in small phases. Developers discuss diffs, suggested reversions, and targeted single-line edits. These conversations surface quality metrics, test coverage gaps, and security concerns, leading to informed decisions and fewer errors.

How can conversational refinement support long-term maintainability and learning?

Conversational refinement supports long-term maintainability by prioritizing readability, alignment with code standards, and documentation. It also enables knowledge transfer through mentoring sessions and guidance for junior developers. Session logging and progress tracking encourage continuous improvement, helping teams maintain scalable, understandable, future-ready codebases.

Making Conversational Refinement Work for Your Team

Start small by introducing intention templates for your most common review cases. Keep a shared doc with real examples that turn vague notes into concrete requests, so “this feels slow” becomes “reduce complexity with a better sorting approach.” You can also warm up by narrating your reasoning out loud during pair programming.

Conversational refinement turns review from a chore into a shared problem-solving ritual. The code gets better, and the team grows sharper, more aligned, and more secure in how they build. If you want to bring that same mindset to security and ship safer code from day one, check out the Secure Coding Bootcamp.
