If-else logic doesn’t scale. Piling conditions on conditions creates brittle systems that crack as soon as new data appears. Every exception demands another rule, and each rule raises maintenance cost, slows execution, and obscures intent. Over time, teams stop understanding why changes break things, because behavior is scattered across hundreds of branches.

This approach is not intelligence; it is scripting pushed past its limits. The real burden is not writing the first condition, but keeping the thousandth one consistent, tested, and fast. That costs compounds until progress stalls. To see why this fails and what works better, keep reading now.

Key Takeaways

- Complex conditional logic leads to unmanageable “logic sprawl” that is impossible to fully test or debug.

- This rigidity creates massive technical debt, consuming developer time on maintenance instead of innovation.

- The performance costs are real, from CPU inefficiencies to crippling latency in live systems.

The Logic Trap

We started with a simple rule: show a dollar sign for U.S. users. Then we needed Canada, then the Eurozone. Before long, we were twelve levels deep, tangled in regional taxes, currency formats, and holiday sales. The paths multiply.

Ten conditions? Over a thousand paths. Twenty? More than a million. It’s a dark forest; nobody knows what’s lurking in the thickets. This is a combinatorial explosion. Testing it all is impossible.

Maintenance became a nightmare. We’d spend weeks tracing why one user in one specific scenario broke. Fix a rule for Europe, and you’d break pricing for Australia. The logic is so tightly woven that pulling one thread unravels a sweater on the other side of the world. Developers grew afraid to touch it. They’d just patch around the edges, adding yet another special case, because the core had become a minefield.[1]

This is a combinatorial explosion, and it’s one of the most persistent common secure coding challenges in large rule-based systems, where testing every possible path quickly becomes impossible.

Look at what happens:

- Debugging turns into a detective story with no end.

- Missed edge cases create weird, inconsistent AI in production.

- The mental load burns out good engineers.

- Readability dies after three nested if statements. It’s spaghetti. If a developer can’t follow the logic in thirty seconds, they’ll probably break it. The system we built became a black box, even to us.

The Hidden Costs of Maintaining Rule-Based AI

Rule-based AI doesn’t just get built; it gets inherited. Like a house with endless patchwork repairs, its logic becomes a tangle of “if-then” branches. We’ve seen it firsthand, a single change to a discount rule breaks three others in the checkout flow. Suddenly, 60% of a sprint is spent on debugging, not building. That’s the technical debt trap: your roadmap stalls because the foundation is made of sand.

The real cost is in the hours. Senior engineers babysitting conditionals, playing whack-a-mole with conflicts that emerge from a system nobody can fully picture anymore. The opportunity is lost. You could have built something new, but instead you’re tracing through 100-layer nested logic, adding print statements just to see where the logic failed. The machine’s complexity becomes your burden.

When overlapping rules start producing arbitrary outcomes, the risks of vibe coding surface quickly, leaving teams to maintain behavior they can no longer clearly reason about.

And the edge cases? They’re inevitable.

- A rule for “premium users” gives a discount.

- A rule for “clearance items” forbids discounts.

- What happens for a premium user buying clearance?

Without a clear priority system, the AI picks arbitrarily. Users see inconsistency, trust erodes, and you’re left maintaining a rickety structure that can’t make up its own mind.

How Brittle Logic Sabotages Performance

Complex logic doesn’t just break; it bogs everything down. Modern processors are prediction engines, guessing which path your code will take next. Deeply nested “if-else” branches make a mockery of that. The CPU guesses wrong, flushes its pipeline, and what should be a nanosecond check stalls for twenty times longer. You’re literally wasting silicon cycles before your AI even gets to think.

We see this in the latency. Every conditional check, for a user flag, a content rule, a compliance flag, adds a few milliseconds. Run fifty checks before a request hits your core model, and those milliseconds stack into whole seconds of delay. In our work, that’s the difference between a snappy tool and one users abandon.

The resource waste is brutal. You’re paying for expensive GPU time to run a powerful model, then throttling it with cheap, slow conditional code. It’s like using a supercomputer but forcing it to write with a quill. The bottlenecks aren’t in the intelligence; they’re in the bureaucratic plumbing we built around it.

Scalability Versus Rigid Conditional Chains

Scalability isn’t about handling more traffic; it’s about handling more complexity. A rule-based system hits a wall. You simply can’t hire enough developers to manually code for every nuance of human language or every new edge case. The system is rigid, so when business needs to shift, it breaks.

We’ve seen the cost curve firsthand. Look at the comparison:

| Feature | Rule-Based (If-Else) | Machine Learning (Probabilistic) |

| Adaptability | Rigid; needs manual code changes | High; learns from new data patterns |

| Data Handling | Struggles with ambiguity | Excels at finding hidden nuances |

| Growth Cost | Increases exponentially with rules | Scales more linearly with compute |

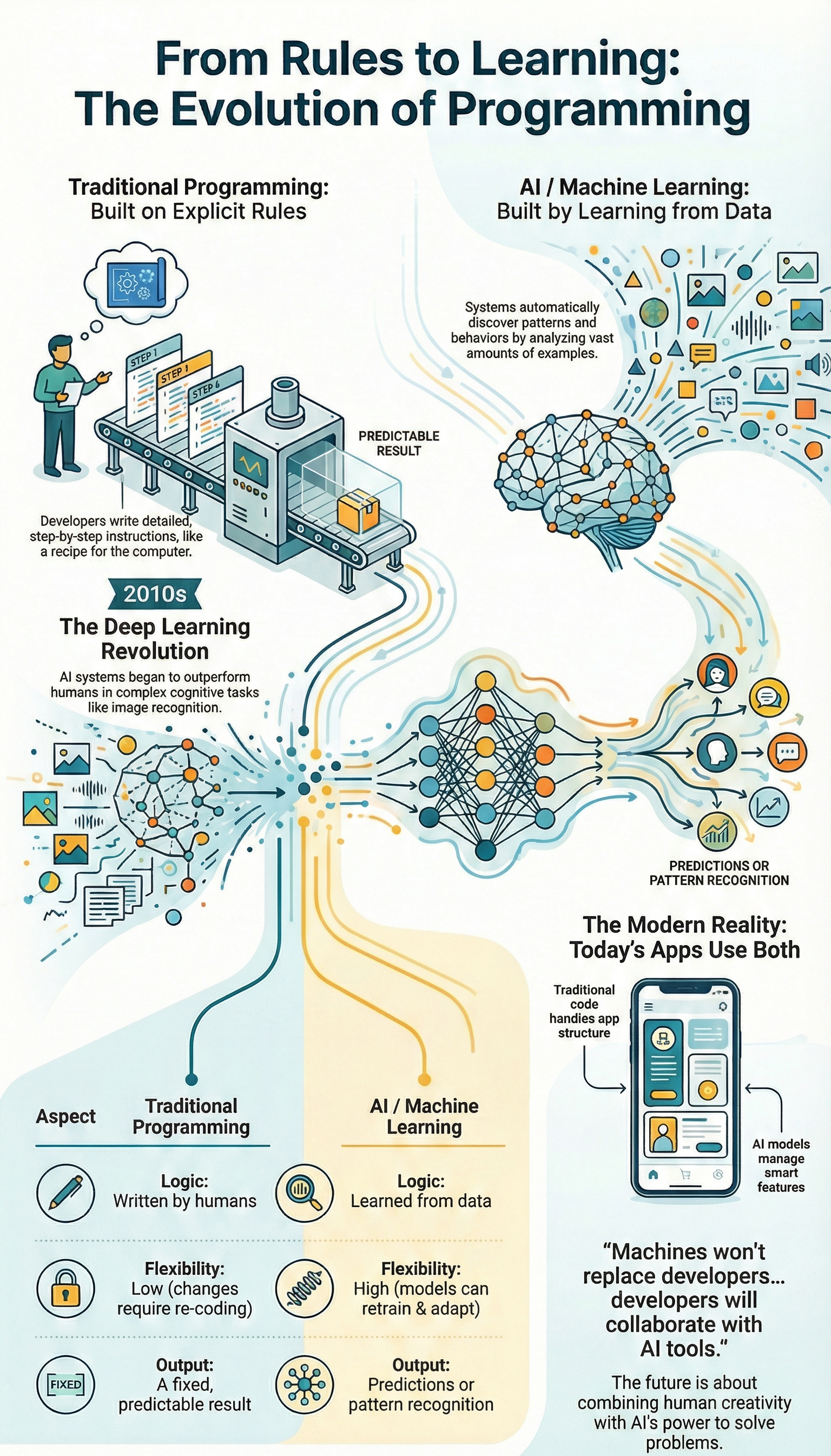

Every new rule interacts with every old one. The maintenance overhead doesn’t just grow, it explodes. The probabilistic model, in contrast, gets better with more examples. It generalizes. It adapts.

That lack of adaptability is the core failure. The real world is messy and ambiguous. A rigid system sees uncertainty and either picks randomly or fails. A learning system can operate in the probability space, offering a probable answer with a confidence level. For us, that’s the difference between a constant drain and a true asset.

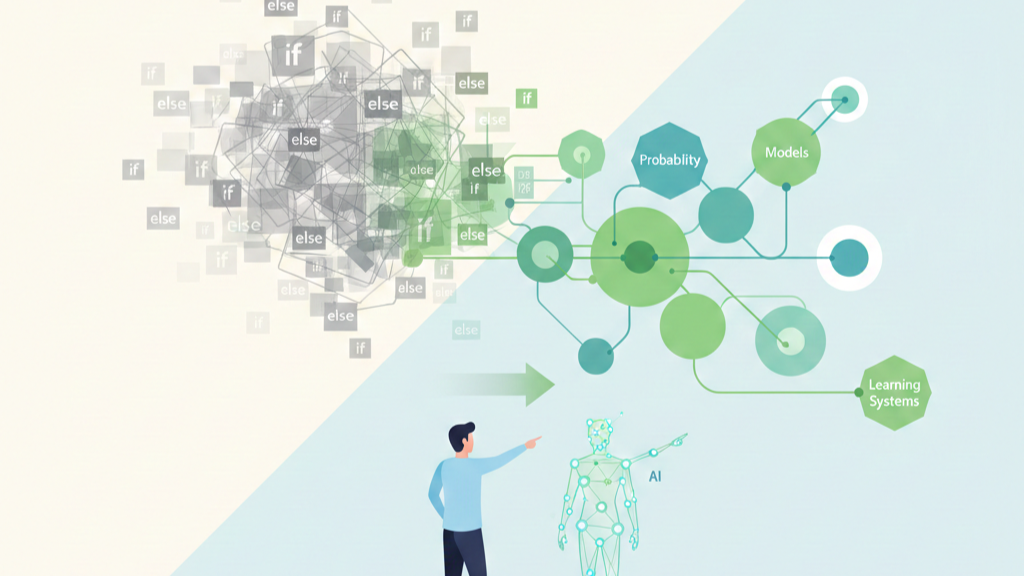

The Shift in Mindset

Credit: Box

The way out starts with a change in perspective. You stop thinking in absolute, rigid paths and start thinking in likelihoods, probabilities, and meaning. Instead of “if this exact phrase, then that,” you ask, “what is this user’s intent most similar to?” This transition from deterministic rule-chains to probabilistic reasoning is what lets AI finally handle the uncertainty of the real world.

Our first move is always to find the “logic hotspots.” You look for the files where if-else chains are nested five levels deep, or where the cyclomatic complexity score is a nightmare. These are the prime candidates. Don’t try to fix everything at once, attack the most painful, most frequently changed parts of the system. The immediate payoff in reduced bugs and clearer code fuels the rest of the work.

From a security and maintainability standpoint, this shift is crucial. We’re building systems that are resilient to change and clear in their intent. By cutting down on hardcoded branches, we shrink the attack surface for logical errors and make the whole codebase easier to audit and reason about.

“Complexity kills. It sucks the life out of developers” — Robert C. Martin [2].

Systems dominated by deep conditional logic quietly widen the skill divide on a team, making avoiding the programming skill gap as much an architectural concern as an educational one.

Practical Steps Forward

So what does this look like in practice? You replace static rules with semantic understanding. For example, instead of twenty rules checking for different ways to ask for the weather, you create a single vector embedding of the query and compare it to a known “weather inquiry” embedding.

If the similarity is high enough, you trigger the weather flow. You’re not checking keywords; you’re comparing meaning. This one move can erase hundreds of lines of brittle code and handle synonyms or typos you never wrote a rule for.

Here are a few concrete patterns we use to dismantle conditional complexity:

- LLM-based classification: A small, fast model can categorize user intents with high accuracy, capturing nuances rigid code would miss.

- Explicit state machines: For defined processes, a clear state machine kills nested conditionals for workflow control. The state is obvious, and transitions are explicit.

- The strategy pattern: Encapsulate whole algorithms behind a common interface and select the right one at runtime.

The outcome is tangible. You start writing code that declares what you want, not how to navigate a labyrinth of “if” statements to get there. Your team spends time curating data and refining models, not wrestling with boolean algebra. The system becomes adaptable, almost on its own.

FAQ

Why does complex if-else logic fail as AI rules increase?

Complex if-else logic grows into nested conditionals and conditional chaining very quickly. This causes decision tree explosion and combinatorial explosion. Rule-based AI then develops code maintainability issues, scalability limitations, and readability problems. Debugging challenges increase, testing complexity rises, and refactoring difficulties appear. Over time, logic sprawl becomes spaghetti code and full if-else hell.

How do nested conditionals lead to incorrect AI decisions?

Nested conditionals increase cognitive complexity and cyclomatic complexity. Small edits often introduce conflicting conditions and rule conflicts. Edge case handling becomes unreliable and causes brittleness in AI. Priority resolution and rule ordering issues are difficult to track. These problems lead to poor generalization, inflexibility, and rigid decision making in real situations.

Why is rule-based AI difficult to maintain over time?

Rule-based AI relies on hardcoded rules and manual rule updates. This creates a maintenance nightmare and leads to developer fatigue. Knowledge acquisition bottleneck slows system growth. Expert system pitfalls appear as exponential growth rules increase rule fatigue. These non-adaptive systems lack learning and cannot respond well to new or changing data.

How does complex if-else logic reduce system performance?

Large rule sets cause performance bottlenecks and CPU cycle waste. Branch prediction failure increases misprediction costs. Algorithmic complexity rises with boolean nesting and switch statement limits. Backtracking inefficiency adds delay. These issues reduce speed while still failing at uncertainty handling and incomplete data problems.

What design approaches can replace if-else hell effectively?

Polymorphism alternatives, strategy pattern use, state machine design, and decision table approach reduce logic sprawl. These methods improve structure and readability. Data-driven decisions reduce lack of learning. Machine learning superiority allows systems to adapt. Developers must manage model interpretability tradeoffs and explainable AI needs where transparency is required.

Moving Past the Logic Sprawl

Complex if-else logic in AI isn’t minor technical debt, it’s an architectural dead end. It promises control but delivers fragility, shrinking with every new rule. We cage systems in brittle decision trees instead of using what machines do best: probability, similarity, and learning at scale.

The fix isn’t removing logic, but elevating it into models and data. Start with your worst offender, map its paths, then replace it with smarter patterns. Ready to build resilient systems? Join the Secure Coding Bootcamp

References

[1] https://csrc.nist.gov/pubs/sp/800/218/final

[2] https://martinfowler.com/articles/designDead.html