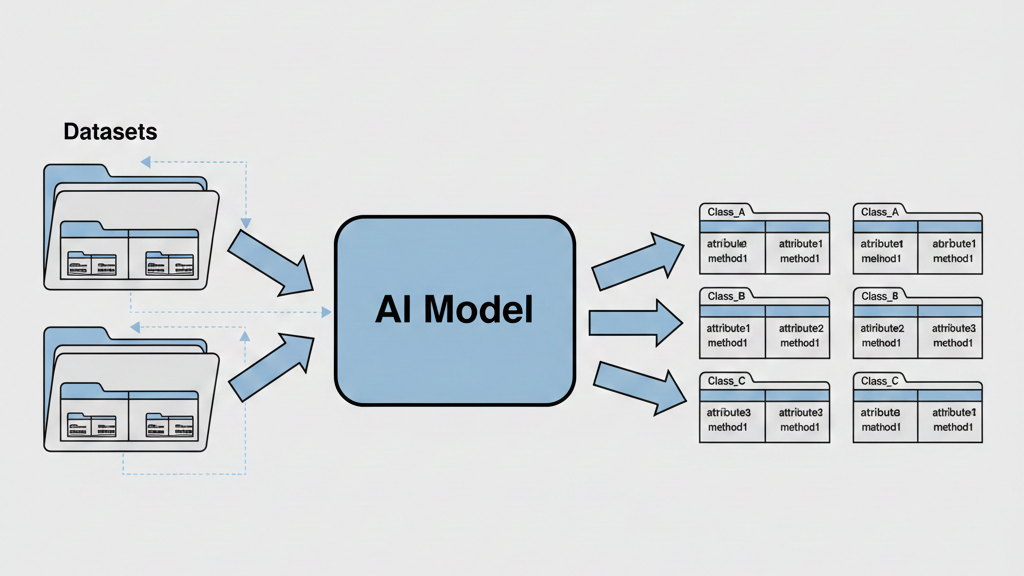

AI generates duplicative classes because it’s mimicking the repetitive patterns in its training data, and its token-by-token generation can get stuck in loops. The model isn’t thinking about architecture; it’s statistically predicting the next most likely piece of code, which often is a repeat of what came before.

It’s like a pianist who only knows one riff, playing it over and over because the notes feel familiar and safe. This leads to bloated, hard-to-maintain code that can introduce subtle bugs and security gaps. If you want to understand why your generated code looks so repetitive and how to steer it toward cleaner output, keep reading.

Key Takeaways

- AI’s training on common, repeated code patterns directly causes it to generate redundant classes and functions.

- The mechanics of token prediction often trap models in repetitive loops, especially with simpler decoding methods.

- Mitigating this requires both smarter generation techniques and a developer’s critical eye for refactoring.

How Training Data Patterns Trigger Class Duplication

It starts in the data. We train models on oceans of public code, where boilerplate configs, DTOs, and static utilities are the tide. To the AI, frequency is probability. Ask for a helper class, and you get the statistical average of every helper it’s ever seen, not something new.

Smaller models get stuck. Their repetition scores can hit nearly 50% for three-word sequences. It’s a short loop. You see it in API tools: reuse a schema title, and the model might output EntityMapping1 and EntityMapping2 to dodge a naming conflict. The logic inside is identical. It’s following a rule, not applying understanding.

As the original Attention Is All You Need paper puts it:

“The Transformer is the first transduction model relying entirely on self-attention to compute representations of its input and output.” – Vaswani et al. [1]

This kind of overfitting is not just a code quality issue, it is one of the challenges and common pitfalls in secure coding, where duplicated logic quietly increases complexity and weakens auditability. The model is so trained on the common template it can’t deviate.

The common sources:

- Boilerplate configs

- Data Transfer Objects

- Static utility classes

The sign? Multiple classes with similar names and the same core logic. Our move is to recognize the template and consolidate it. Don’t let the machine’s statistical habit add bloat to your own systems.

Why Autoregressive Decoding Causes Repetitive Loops

The generation process itself reinforces this. These models work autoregressively. They predict the next token, then the next, building output one piece at a time. It’s a bit like walking blindfolded, feeling for the next stepping stone.

If the stones are all the same, you’ll just walk in a circle. The model gets a high-probability sequence, like a standard class definition, and the mechanics of its prediction can make it hard to break away.

Greedy search, a common decoding method, amplifies the issue. It always picks the single most probable next token. There’s no exploration, no “what if.” This lack of variety is a straight path to repetition. Studies show that in severe cases, 89.9% of these repetitive outputs will continue until they hit the maximum token limit, at which point they’re just truncated.

You’re left with a file that’s half useful code, half the same three lines repeated ad infinitum. Even more advanced techniques like beam search or top-p sampling, which allow for some variation, don’t fully eliminate the risk. They just make the loops wider and more subtle.

The Primary Repetition Patterns in AI-Generated Code

It’s not random. Empirical studies have cataloged over twenty distinct repetition patterns. They generally fall into a few categories that you’ll start to recognize. The most obvious is block-level duplication. This is where entire functions or classes are regurgitated verbatim.

You might get two calculateTotal methods in different parts of the codebase, doing the exact same thing. Then there’s statement-level redundancy. Think repeated field assignments in constructors or nearly identical lines setting up configuration parameters.

| Pattern Type | What It Looks Like | The Real-World Impact |

| Block-level | A whole Logger class defined twice. | Kills readability and makes refactoring a nightmare. |

| Statement-level | The same setTimeout call is copied across ten event handlers. | Unnecessarily bloats the code size and obscures intent. |

| Near-duplicates | Two test methods where only the input parameter changes. | Creates a false sense of coverage and complicates maintenance. |

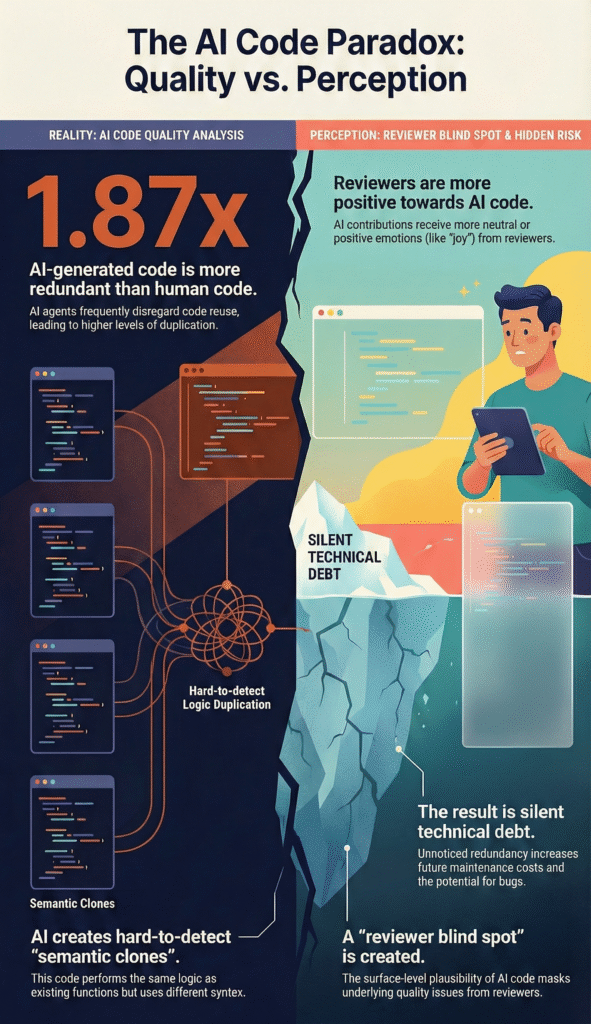

The most insidious might be the near-duplicates. The logic is 95% the same, but a variable name or a parameter is different. The AI interprets this as a new requirement, but a human sees wasted effort. This is where the gap between AI and human coding is stark. Analysis of human-written code shows a ground-truth rep-3 rate around just 3.3%. We naturally refactor. We see repetition and consolidation. The AI, left to its own devices, does not.

Model Size and Instruction Tuning’s Impact on Residency

Credit: Trena Little

Not all models are equally prone to this. The scale and the training approach matter a great deal. It’s intuitive, maybe. A larger model has a broader view, more parameters to capture nuance. It’s less likely to get stuck on the most trivial pattern because it has a deeper well of context to draw from. Instruction tuning is the other key factor.

A model fine-tuned on specific tasks, like “generate concise, non-repetitive code,” learns that repetition is an undesirable output.

The performance difference is measurable. Take the WizardCoder-15B model, which is instruction-tuned. Its rep-line metric, tracking repeated lines of code, sits at a low 1.3%.[2]

Compare that to a smaller, base model without that tuning, and the repetition rates can be orders of magnitude higher. The instruction acts as a guiding hand, subtly steering the model away from the easy, repetitive path and toward more diverse and appropriate structures. It learns the intent behind “write a class,” not just the common syntax for one.

- Larger Parameter Counts: Provide more “working memory” to avoid simple loops.

- Instruction Tuning: Teaches the model coding best practices, like DRY principles.

- The Trade-off: Larger, tuned models require more resources but produce cleaner first drafts.

When the AI Starts Repeating Itself

You see the same class pop up again, a glitch in the loop. We’ve all been there. The fix starts with how you ask. Prompt engineering matters. Don’t request “a class to validate email.” That’s a dead end.

Ask for “a single, reusable Validator class for email, phone, and username.” You’re giving it the blueprint it needs. Tweak the technical knobs, too, nudge the temperature or top-p sampling higher. It adds a bit of randomness, breaking the model’s predictable pattern.

Then comes cleanup. Our tools and our judgment work together here. Algorithms like DeRep scan code, find duplicate blocks, and prune them, keeping just the original. The data’s clear: this can cut repetition by 80% to over 90%.

It’s a huge efficiency gain. And it makes the code work better. Studies show this pruning can more than double the Pass@1 rate, the odds the code runs right the first time. Cleaner code is more reliable code. Less clutter, fewer bugs hiding in the shadows.

This is where many teams fall into the main risks of vibe coding, accepting repetitive or bloated output because it “looks right,” without questioning the underlying structure or security impact.

The Human in the Loop

The most important strategy, though, lives outside the machine. It’s the human review. We treat every AI-generated block as a first draft, a strong suggestion, but never the final word. Our job is to edit, to synthesize. This is where our real expertise in threat models and risk analysis kicks in. We look at that draft and start asking our security-focused questions:

- Does this duplication increase our attack surface?

- If there’s a flaw in this repeated logic, does it now exist in five places instead of one?

By refactoring three separate validator classes into one coherent component, we’re not just being neat. We’re actively reducing complexity, which is a bedrock principle of security. It makes the code easier to audit, to test, and to harden against emerging threats.

Understanding how to avoid the programming skill gap is critical here, because only developers with strong fundamentals can recognize duplication, consolidate logic, and reduce security risk introduced by AI-generated code.

The AI hands us the raw clay. We’re the ones who shape it into a secure, maintainable structure. That’s the partnership, it gives us speed, we provide a secure design.

FAQ

Why does AI code duplication happen during class generation?

AI code duplication happens because models follow high-probability sequences during token prediction. LLM repetition patterns form when training data overfitting and neural network code bias push similar structures repeatedly.

Autoregressive decoding loops reuse familiar templates, which causes class generation flaws, block-level duplicates, and near-duplicate code. Smaller model exacerbation increases exploration deficiency and function repetition issues.

How do decoding methods increase code generation redundancy?

Code generation redundancy increases when greedy search artifacts limit exploration. Autoregressive decoding loops repeat the same patterns, which leads to statement-level redundancy and infinite repetition truncation.

Beam search mitigation reduces this risk but does not eliminate it. Top-p sampling variety encourages diverse structure mimicry and lowers repeated class layouts in long outputs.

How can teams measure and detect repeated AI-generated code?

Teams measure repetition using n-gram repetition metrics and rep-3 score analysis. These methods identify block-level duplicates, statement-level redundancy, and near-duplicate code.

Static analysis tools such as SonarQube duplicates reports help validate findings. Python duplicate detection, Jupyter notebook redundancy checks, and code corpus analysis support empirical repetition studies.

What techniques reduce AI-generated duplication before production?

Post-generation deduplication removes repeated blocks using DeRep algorithm pruning. Instruction tuning benefits reduce pattern replication bias and non-tuned model risks. Model size impact matters because smaller model exacerbation increases repetition.

Output length limits, refactoring necessities, and generated code annotation improve code maintainability tradeoffs and address DRY principle challenges.

Why does duplicated code hurt quality, security, and content goals?

Duplicated code causes code quality degradation and readability reduction. It increases cybersecurity code flaws and weakens defense in depth coding, which limits attack surface reduction.

OpenAPI schema duplicates, EntityMapping collisions, and naming collision avoidance failures break integrations. For SEO code generation keywords, keyword stuffing avoidance and topical relevance boost support answer engine optimization.

Steering the Echo Toward Originality

Duplicative classes aren’t a broken tool; they’re an echo of how AI is built and prompted. Models reflect patterns, and decoding can amplify repetition into redundancy. The fix isn’t avoidance, it’s better editing. Understand why repetition happens. Prompt with intent. Choose stronger models. Clean up after generation.

Then do the real work: refactor, consolidate logic, and enforce DRY. That final pass turns probabilistic output into secure, maintainable software. AI supplies the clay; your judgment shapes it. Ready to sharpen that skill? Join the Secure Coding Bootcamp.

References

- https://arxiv.org/abs/1706.03762

- https://arxiv.org/abs/2306.08568