You ask a question, and a machine answers like a person. It seems like magic, but conversational AI programming is a careful craft. It stitches together several smart systems to understand, think, and reply. We build these systems by processing language, managing conversation flow, and learning from every chat.

This article strips away the mystery and shows you the gears turning inside a modern AI assistant. You will see how intent is spotted, how context is remembered, and how responses are crafted on the fly. Keep reading to see how conversational AI programming works.

Key Takeaways

- It breaks down language to find your goal and key details.

- It manages the chat flow, remembering what was said before.

- It generates a natural reply and learns from each interaction.

The Foundation: From Sound to Sense

It starts with your voice or your text. If you speak, an automatic speech recognition system kicks in. It turns the sound waves of your voice into written words. This is a tough job, because background noise can mess it up. But modern systems are pretty good at it, and they get it right most of the time in quiet rooms.

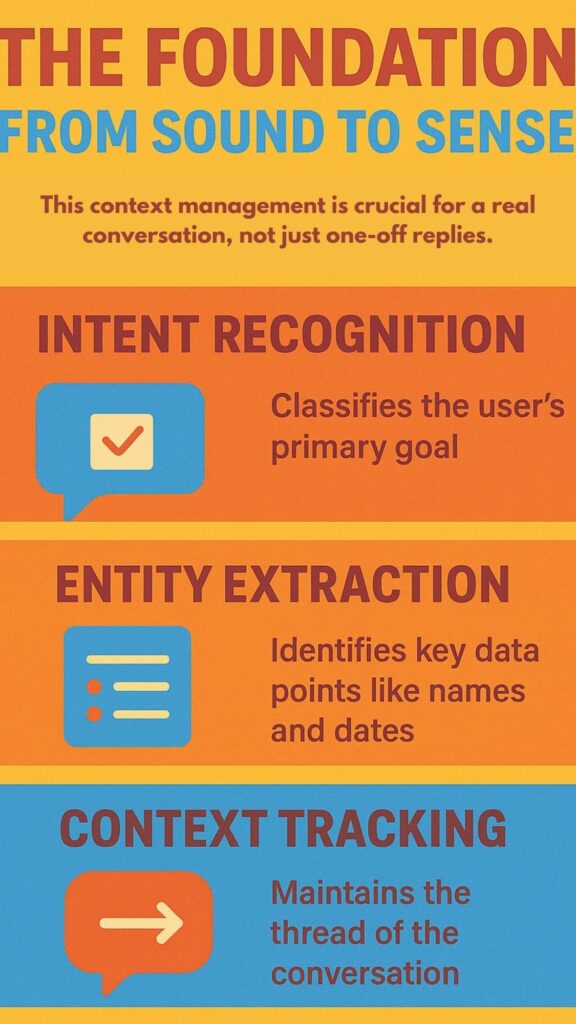

Once you have text, the real work begins. The system needs to understand what you mean, not just what you said. This is natural language understanding. It’s the brain of the operation. First, it figures out your intent. Are you asking a question, making a request, or just being chatty? This is called intent recognition.

Then, it looks for specific pieces of information, the entities. If you say “Book a flight to Paris tomorrow,” the intent is “book_flight.” The entities are “Paris” (a location) and “tomorrow” (a date). The system pulls these out so it knows what to act on.

It also checks the context. Did you mention Paris earlier? Is this part of a longer booking process? This context management is crucial for a real conversation, not just one-off replies.

- Intent Recognition: Classifies the user’s primary goal (1).

- Entity Extraction: Identifies key data points like names and dates.

- Context Tracking: Maintains the thread of the conversation.
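The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not how production systems do it: real assistants use trained classifiers rather than keyword lists. The intent names, the keyword lists, and the `KNOWN_CITIES` set are all assumptions made up for this example.

```python
import re
from datetime import date, timedelta

# Hypothetical keyword lists per intent; a real system would use a trained model.
INTENT_KEYWORDS = {
    "book_flight": ["book", "flight", "fly"],
    "check_weather": ["weather", "forecast", "rain"],
}

# Hypothetical list of locations the assistant knows about.
KNOWN_CITIES = {"paris", "london", "tokyo"}


def recognize_intent(text: str) -> str:
    """Return the intent whose keywords overlap most with the message."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {
        intent: len(words & set(keywords))
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


def extract_entities(text: str, today: date) -> dict:
    """Pull out a location and a relative date, if present."""
    words = re.findall(r"[a-z]+", text.lower())
    entities = {}
    for word in words:
        if word in KNOWN_CITIES:
            entities["location"] = word.capitalize()
    if "tomorrow" in words:
        entities["date"] = today + timedelta(days=1)
    elif "today" in words:
        entities["date"] = today
    return entities


def update_context(context: dict, entities: dict) -> dict:
    """Carry earlier details forward; newer values override older ones."""
    return {**context, **entities}
```

Calling `recognize_intent("Book a flight to Paris tomorrow")` returns `"book_flight"`, and `extract_entities` on the same sentence finds the location `"Paris"` plus a date one day after the `today` you pass in.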

The Conversation Manager: Keeping the Chat on Track

After understanding you, the AI needs to decide what to do next. This is dialogue management. Think of it as the conductor of an orchestra. For simple tasks, a rule-based system works fine. If the user says A, then say B. But life is rarely that simple. What if the user changes the subject? What if they give half an answer?

That is where machine learning takes over. More advanced systems use reinforcement learning. They try different responses and learn from which ones lead to a successful outcome, like a happy user.
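To make the "try, then learn from the outcome" idea concrete, here is a small sketch using an epsilon-greedy bandit. This is a deliberately simplified stand-in for full reinforcement learning, and the class name `ResponseBandit`, the reward values, and the `epsilon` default are assumptions for illustration only.

```python
import random


class ResponseBandit:
    """Epsilon-greedy choice among candidate replies, rewarded by user feedback."""

    def __init__(self, responses, epsilon=0.1, seed=0):
        self.responses = list(responses)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = [0] * len(self.responses)
        self.values = [0.0] * len(self.responses)  # running mean reward per reply

    def choose(self) -> int:
        """Pick a reply index: explore sometimes, otherwise use the best so far."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.responses))
        return max(range(len(self.responses)), key=lambda i: self.values[i])

    def update(self, index: int, reward: float) -> None:
        """Fold one piece of feedback into the running mean for that reply."""
        self.counts[index] += 1
        self.values[index] += (reward - self.values[index]) / self.counts[index]
```

A reward of 1.0 might stand for a happy user and 0.0 for an abandoned chat. Over many conversations, the reply with the higher average reward gets chosen more often.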

The most modern systems use large language models (LLMs). These models are trained on huge amounts of text, which is in line with the adaptive design principles found in vibe coding.

They are not just following rules. They are generating a plausible next turn based on everything they know.

This part of the system handles dialogue state tracking. It keeps a running tab of what has been agreed upon, what information is still missing, and what the goal of the conversation is. Without this, every exchange would feel like starting from scratch. It is what allows for a multi-turn dialogue that actually makes sense.
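A running tab like this can be sketched as a small state object plus a rule for what to do next. The slot names in `REQUIRED_SLOTS`, the `book_flight` goal, and the action names are hypothetical, chosen to match the flight example used earlier.

```python
from dataclasses import dataclass, field

# Slots the hypothetical book_flight goal needs before it can be completed.
REQUIRED_SLOTS = ("destination", "date")


@dataclass
class DialogueState:
    goal: str
    slots: dict = field(default_factory=dict)

    def update(self, new_slots: dict) -> None:
        """Merge information from the latest turn into the running state."""
        self.slots.update(new_slots)

    def missing(self) -> list:
        """Slots still needed, in the order they should be asked for."""
        return [name for name in REQUIRED_SLOTS if name not in self.slots]


def next_action(state: DialogueState) -> str:
    """Rule-based policy: ask for the first missing slot, else confirm."""
    missing = state.missing()
    if missing:
        return f"ask_{missing[0]}"
    return "confirm_booking"
```

If the user has only said "Paris" so far, `next_action` returns `"ask_date"`. Once the date arrives in a later turn, it returns `"confirm_booking"`, without asking for the destination again.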

Crafting the Response: From Thought to Words

Now the AI knows what to say, but how does it say it? This is natural language generation. There are two main ways. The simple way is template-based. The system has a pre-written sentence with blanks. It fills in the blanks with the entities it extracted. “Your flight to [city] is booked for [date].” It is clear and reliable, but it can sound robotic.
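Template-based generation is simple enough to show in full. In this sketch the template names and the `render` helper are assumptions for illustration; the first sentence is the one quoted above.

```python
# Pre-written sentences with named blanks.
TEMPLATES = {
    "booking_confirmed": "Your flight to {city} is booked for {date}.",
    "ask_date": "When would you like to fly to {city}?",
}


def render(template_name: str, **entities: str) -> str:
    """Fill a template's blanks; raises KeyError if a blank has no value."""
    return TEMPLATES[template_name].format(**entities)


print(render("booking_confirmed", city="Paris", date="tomorrow"))
# prints: Your flight to Paris is booked for tomorrow.
```

The reply can never say anything the template author did not write, which is exactly why it is reliable and also why it sounds stiff.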

The more advanced way is neural generation. Here, the AI uses a model, often a transformer model, to create the response word by word. It is not just filling slots. It is writing a new sentence that fits the context and sounds natural.

This is how you get those surprisingly human-like replies from the latest chatbots. The downside is that it can sometimes hallucinate, or make up information, which is a big focus for developers right now.
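The word-by-word loop can be illustrated with a toy model. The probability table below is invented for this example and only looks at the previous word, whereas a real transformer scores the next word using the whole conversation so far. The loop itself, picking a next word and appending it until an end marker appears, has the same shape.

```python
# Toy next-word probabilities, invented for illustration. A real transformer
# conditions on the whole context, not only the previous word.
NEXT_WORD = {
    "<start>": {"your": 0.9, "the": 0.1},
    "your": {"flight": 0.8, "seat": 0.2},
    "flight": {"is": 0.7, "was": 0.3},
    "is": {"booked": 0.6, "late": 0.4},
    "booked": {"<end>": 1.0},
}


def generate(max_words: int = 10) -> str:
    """Greedy decoding: repeatedly append the most probable next word."""
    words = []
    current = "<start>"
    for _ in range(max_words):
        options = NEXT_WORD.get(current)
        if not options:
            break  # the toy table has no continuation for this word
        current = max(options, key=options.get)
        if current == "<end>":
            break
        words.append(current)
    return " ".join(words)


print(generate())
# prints: your flight is booked
```

Notice that the model picks whatever is most probable, not whatever is true. Nothing in the loop checks that a flight was actually booked, which is the root of the hallucination problem.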

If the conversation is happening by voice, the text response then goes to a text-to-speech system. Old TTS sounded like a robot.

New TTS, powered by neural networks, can produce voices that are smooth and expressive, almost indistinguishable from a human recording in some cases (2). This completes the loop, turning digital thought into spoken word.

The Learning Loop: Getting Smarter Every Day

A static AI is a dumb AI. The best systems learn from their interactions. One common technique is reinforcement learning from human feedback, or RLHF, in which people rate or rank model responses and those judgments become the training signal. A response judged helpful is a positive signal. One judged unhelpful is a negative signal. The system uses these signals to adjust its internal models over time, usually in periodic training runs rather than live during a chat, getting better at satisfying users.

Developers also fine-tune models. They take a general-purpose AI and train it further on specific data, like medical journals or legal documents, following a refinement process similar to the vibe coding fundamentals.

This creates a specialist that is much more accurate in its domain. This entire process requires a robust infrastructure for logging conversations and analyzing performance metrics like intent accuracy and user satisfaction.

- Feedback Integration: Uses successful interactions as positive reinforcement.

- Model Fine-Tuning: Adapts general models for specialized tasks.

- Performance Analytics: Tracks metrics to identify areas for improvement.
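The analytics side can start very small. The sketch below computes the two metrics mentioned above from logged turns. The log field names (`predicted_intent`, `true_intent`, `satisfaction`) and the 1 to 5 rating scale are assumptions for this example, not a standard format.

```python
from statistics import mean


def summarize(logs: list) -> dict:
    """Compute intent accuracy and average satisfaction from logged turns."""
    if not logs:
        raise ValueError("no logged turns to summarize")
    correct = sum(
        1 for turn in logs if turn["predicted_intent"] == turn["true_intent"]
    )
    return {
        "intent_accuracy": correct / len(logs),
        "avg_satisfaction": mean(turn["satisfaction"] for turn in logs),
    }


# Hypothetical log entries; satisfaction is a 1-5 user rating.
logs = [
    {"predicted_intent": "book_flight", "true_intent": "book_flight", "satisfaction": 5},
    {"predicted_intent": "book_flight", "true_intent": "check_weather", "satisfaction": 2},
    {"predicted_intent": "check_weather", "true_intent": "check_weather", "satisfaction": 4},
    {"predicted_intent": "book_flight", "true_intent": "book_flight", "satisfaction": 5},
]
report = summarize(logs)
```

Here three of four intents were predicted correctly, so `report["intent_accuracy"]` is 0.75. Turns where the prediction was wrong are good candidates for the next round of fine-tuning data.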

Building a Modern AI Assistant

So what does it take to build one of these today? The technology stack has gotten much more accessible. On the backend, transformer models like BERT and GPT do the heavy lifting for understanding and generation.

They often work with vector databases to store conversational context efficiently, allowing the AI to remember facts from earlier in the conversation.
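The core idea behind vector storage is to turn each remembered fact into a vector and fetch the one closest to the current question. The sketch below uses a toy bag-of-words "embedding" and cosine similarity. Real systems use learned embeddings from a neural model and a dedicated database, and the `MemoryStore` class here is a hypothetical stand-in.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (real systems use a model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class MemoryStore:
    """Keeps remembered facts with their vectors and returns the closest ones."""

    def __init__(self):
        self.items = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

After storing "user wants a flight to paris" and "user prefers window seats", a search for "which seats does the user like" returns the window-seat fact, because it shares the most words with the query.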

Frameworks like PyTorch and TensorFlow are the tools of choice for the machine learning parts. And for putting it all into a real product, cloud platforms offer services that handle much of the complexity, from speech recognition to dialogue management.

This allows smaller teams to build powerful assistants without managing all the underlying infrastructure themselves.

We always prioritize secure coding practices from the very first line. This means building in safeguards to protect user data from the start, not adding them as an afterthought. It is about thinking like a guardian of information, ensuring privacy and security are foundational, not optional.
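One small example of building a safeguard in from the start is to redact personal details before a conversation is ever written to a log. The patterns below are simplified assumptions for illustration. They catch common email shapes and long digit runs such as phone or card numbers, and they are not a complete privacy solution.

```python
import re

# Simplified patterns, assumed for this sketch; real redaction needs more care.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
LONG_DIGITS = re.compile(r"\b\d{7,}\b")


def redact(text: str) -> str:
    """Replace emails and long digit runs before the text is logged."""
    text = EMAIL.sub("[EMAIL]", text)
    return LONG_DIGITS.sub("[NUMBER]", text)


print(redact("Mail me at ana@example.com or call 5551234567"))
# prints: Mail me at [EMAIL] or call [NUMBER]
```

Running every message through a function like this before storage means the logs used later for analytics and fine-tuning never hold the raw details in the first place.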

Why This All Matters

Source: IBM Technology

The goal is not to trick people into thinking they are talking to a human. The goal is to create a tool that is genuinely helpful. By understanding how conversational AI programming works, you can see its potential. It is about making technology more accessible.

Instead of learning complex software, you can just talk. This can automate customer service, provide companionship, or help people with disabilities interact with the digital world.

The market for this technology is exploding because the value is real. It is not just a novelty anymore. It is a practical tool that is getting better every day. The shift from rigid, rule-based scripts to fluid, context-aware dialogues is a fundamental change in how we interact with machines.

FAQs

What Is Conversational AI Programming?

Conversational AI programming is the process of creating systems that can understand and respond to human language. It uses tools like natural language understanding, dialogue management, and natural language generation to make conversations feel smooth.

These parts help the AI figure out what the user wants, follow the conversation, and give a helpful reply. The main goal is to let people talk to software naturally, the same way they talk to another person.

How Does Intent Recognition Work in AI Assistants?

Intent recognition is the step where the AI figures out what the user wants to do. Machine learning models look at the user’s message and classify it as a request, question, or command. This tells the AI what action to take next.

Modern transformer models improve this process by examining context and meaning, even when the user uses casual or indirect language. This makes the assistant more accurate and reliable.

Why Is Context Tracking Important in Conversations?

Context tracking helps the AI remember earlier parts of the conversation. Without it, every message would feel disconnected or repetitive.

By keeping track of earlier details, like names, dates, or ongoing tasks, the AI can respond naturally and continue the conversation smoothly. Dialogue state tracking and vector storage make this possible, helping the system follow multi-step exchanges just like a human would in real conversation.

What Role Do Large Language Models Play in Chatbots?

Large language models are the main engine behind modern chatbots. They are trained on huge amounts of text, which helps them generate replies, detect intent, and extract useful information.

They allow chatbots to move beyond simple scripts and handle both straightforward and open-ended questions. Because of their understanding of tone and structure, these models make conversations feel more natural and engaging for users.

How Do Chatbots Generate Natural-Sounding Responses?

Chatbots generate natural responses through natural language generation. Instead of using rigid templates, neural models create sentences one word at a time, based on the conversation. This helps the AI respond in a flexible and human-like way.

These systems can adjust tone, clarify details, or show emotion. Developers improve these models constantly to reduce mistakes and keep replies accurate, so the chatbot sounds both natural and trustworthy.

What Is Reinforcement Learning in Conversational AI?

Reinforcement learning helps chatbots improve by learning from experience. After giving a response, the system receives feedback showing whether the reply was helpful or not.

The AI uses this information to adjust its behavior over time. This method works well for assistants that handle customer questions or guide users through tasks. When paired with human feedback, reinforcement learning makes conversations smoother and more accurate.

How Does Speech Recognition Fit Into Conversational AI?

Speech recognition turns spoken words into text so the AI can process them. Neural networks analyze sound waves and identify the words being said, even with accents or background noise.

Once the audio becomes text, the system can understand intent and context. High-quality speech recognition is important for voice assistants, smart devices, and automated phone systems. It makes hands-free communication easy and natural for users.

What Technologies Are Needed To Build a Conversational AI System?

A conversational AI system uses several technologies working together. Transformer models like GPT and BERT handle the understanding and generation of language. Machine learning frameworks like TensorFlow and PyTorch help developers train these models.

Vector databases store memory from the conversation. Cloud platforms simplify hosting and scaling. Security tools and performance analytics help maintain accuracy, safety, and improvement over time. Together, these tools create a powerful AI assistant.

How Do Developers Reduce Hallucinations in AI Responses?

Developers reduce hallucinations by training models on trusted data, setting strict rules for outputs, and grounding responses in real information. Retrieval-augmented systems pull facts from reliable sources before generating answers.

Human feedback also guides the model toward better accuracy. Regular monitoring helps catch patterns that lead to incorrect results. All these methods help the AI stay factual and avoid making up information.

Why Is Conversational AI Important for Businesses Today?

Conversational AI is important for businesses because it automates support, saves time, and improves customer experience. It gives users quick answers, handles simple tasks, and stays available all day.

This reduces the workload for human agents and speeds up service. AI systems also collect insights from conversations, helping companies improve their services. As the technology grows more advanced, it becomes a strong tool for customer service, efficiency, and business growth.

Your Next Step on How Conversational AI Programming Works

The architecture is complex, but the concept is simple. It is about teaching machines to listen, think, and speak in a way that serves us. From processing sound waves to generating empathetic responses, each layer of technology builds towards a more natural interaction.

The future is not about machines that can hold a perfect philosophical debate. It is about machines that can reliably help you book a flight, troubleshoot a problem, or find a recipe.

That is the real achievement of conversational AI programming. It is a bridge between human need and digital capability. The technology will keep evolving, becoming faster, cheaper, and more reliable.

The core idea, however, will remain. Understand the user, manage the conversation, and provide a useful response. Now that you know how it works, you can start thinking about how you might use it. What problem could a conversational AI solve for you?

And if you want to go a step further, not just building tools, but building them securely, Secure Coding Practices Bootcamp is a strong next move. It’s a practical, developer-focused course that teaches real secure coding skills through hands-on sessions, labs, and real-world examples.

References

- https://medium.com/@aiinisghtful/intent-recognition-using-a-llm-with-predefined-intentions-4620284b72f7

- https://www.forbes.com/sites/parmyolson/2017/11/03/this-startups-artificial-voice-sounds-almost-indistinguishable-from-a-humans/